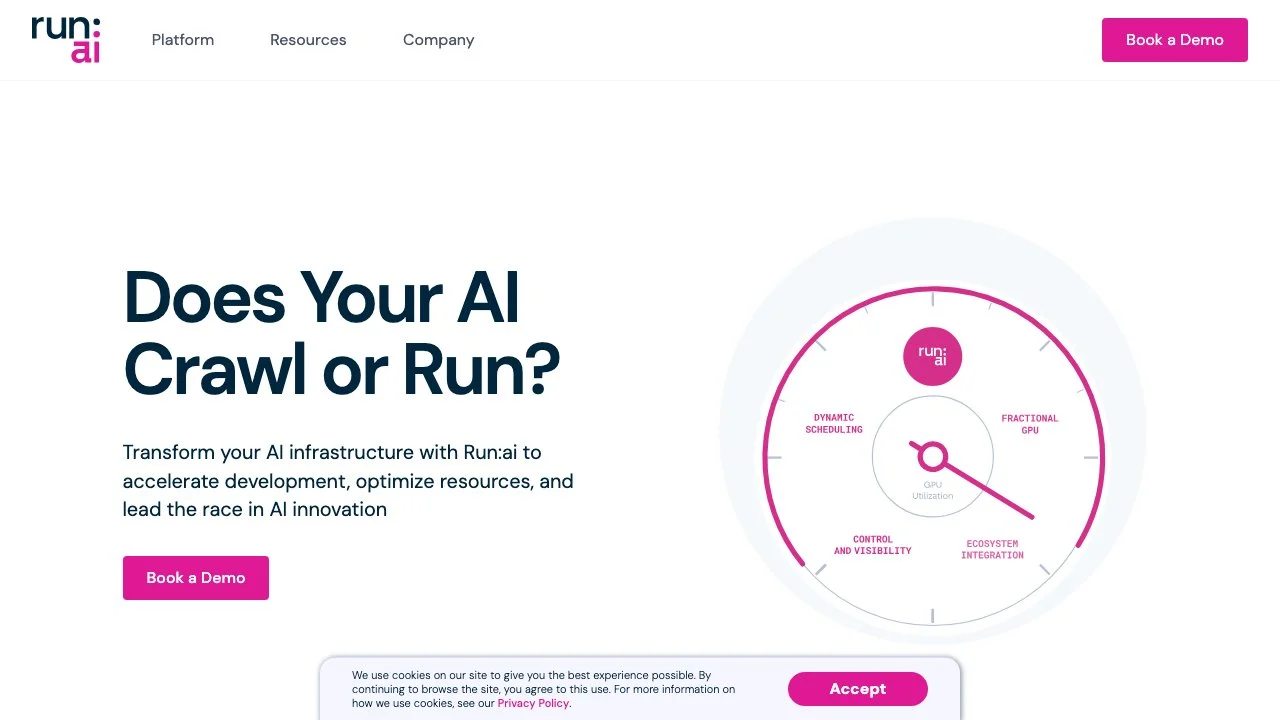

Run:ai is an AI optimization and orchestration platform built to accelerate AI development and improve resource utilization. With its specialized focus on GPUs, Run:ai aims to keep your AI initiatives aligned with the latest technological advances. The platform integrates CLI and GUI workspaces, tools, and open-source frameworks, making it a versatile choice for developers and researchers alike.

At the core of Run:ai's offerings is the Cluster Engine, built to speed up AI initiatives. The AI Workload Scheduler optimizes resource management throughout the AI lifecycle, while GPU Fractioning improves the cost efficiency of notebook farms and inference environments. Node Pooling lets you control heterogeneous AI clusters with quotas, priorities, and policies, so that resources are used as fully as possible.
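To make the idea of quotas concrete, here is a minimal Python sketch of quota-based sharing. It is an illustration only, not Run:ai's scheduler or API: the `Project` class, its `quota` and `demand` fields, and the `fair_share` function are all hypothetical names, and the round-robin handling of spare GPUs is an assumed policy chosen for simplicity.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str
    quota: int   # guaranteed GPUs (hypothetical field)
    demand: int  # GPUs the project currently requests


def fair_share(projects, total_gpus):
    """Give each project min(demand, quota), then hand spare GPUs out
    one at a time, round robin, to projects that still want more."""
    alloc = {p.name: min(p.demand, p.quota) for p in projects}
    spare = total_gpus - sum(alloc.values())
    if spare < 0:
        raise ValueError("guaranteed allocations exceed cluster capacity")

    progress = True
    while spare > 0 and progress:
        progress = False
        for p in projects:
            if spare == 0:
                break
            if alloc[p.name] < p.demand:
                alloc[p.name] += 1
                spare -= 1
                progress = True
    return alloc


projects = [
    Project("vision", quota=4, demand=8),
    Project("nlp", quota=4, demand=2),
    Project("speech", quota=2, demand=5),
]
allocation = fair_share(projects, total_gpus=10)
print(allocation)
```

In this sketch the `nlp` project uses less than its quota, so its idle GPUs are lent to the two projects that are over quota instead of sitting unused.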

Run:ai's platform is designed for efficiency, enabling up to 10x more workloads on the same infrastructure through dynamic scheduling, GPU pooling, and GPU fractioning. It also offers secure, controlled environments with fair-share scheduling, quota management, priorities, and policies. Overview dashboards, historical analytics, and consumption reports provide full visibility into infrastructure and workload utilization across clouds and on-premises deployments.
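The intuition behind GPU fractioning can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Run:ai's implementation: the `gpus_needed` function is a hypothetical helper, each workload is assumed to need a fixed fraction of one GPU, and the packing uses a plain first-fit-decreasing heuristic.

```python
def gpus_needed(requests, fractional):
    """Count the GPUs used by a list of workloads.

    requests:   GPU fraction each workload needs, each in (0, 1].
    fractional: if False, every workload occupies a whole GPU;
                if True, workloads are packed first-fit-decreasing.
    """
    if not fractional:
        return len(requests)

    free = []  # remaining capacity of each GPU already in use
    for r in sorted(requests, reverse=True):
        for i, cap in enumerate(free):
            if r <= cap + 1e-9:  # small tolerance for float rounding
                free[i] = cap - r
                break
        else:
            free.append(1.0 - r)  # no GPU had room, so start a new one
    return len(free)


# Six light workloads, e.g. notebooks or small inference services.
requests = [0.5, 0.25, 0.25, 0.5, 0.25, 0.25]
whole = gpus_needed(requests, fractional=False)
packed = gpus_needed(requests, fractional=True)
print(whole, packed)
```

With whole-GPU allocation, each of the six workloads holds its own device. With fractional allocation, the same workloads fit on two. The actual gain in practice depends on how much of a GPU each workload really uses.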

Run:ai simplifies deployment on your own infrastructure, whether in the cloud, on-premises, or in an air-gapped environment. The platform supports any ML tool and framework, Kubernetes, and any infrastructure, including GPU, CPU, ASIC, storage, and networking. This flexibility lets Run:ai meet the diverse needs of AI development teams.

Run:ai also introduces a new AI Dev Platform that is simple, scalable, and open. It offers notebooks on demand, allowing users to launch customized workspaces with their favorite tools and frameworks. Training and fine-tuning are streamlined: users can queue batch jobs and run distributed training with a single command. Private LLMs can be deployed and managed from one place, further enhancing the platform's utility.

Innovation is at the heart of Run:ai: testimonials from industry leaders highlight the platform's impact on accelerating AI development and shortening time-to-market. The partnership with NVIDIA offers what Run:ai describes as the world’s most performant full-stack solution for DGX Systems, showcasing Run:ai's commitment to leading-edge technology.

From addressing the cold-start challenge in AI inference to expanding AI infrastructure with enhanced features and open-source innovation, Run:ai is dedicated to pushing the boundaries of what's possible in AI development. With a comprehensive suite of resources, including blog posts, white papers, case studies, and documentation, Run:ai is not just a platform but a partner in your AI journey.