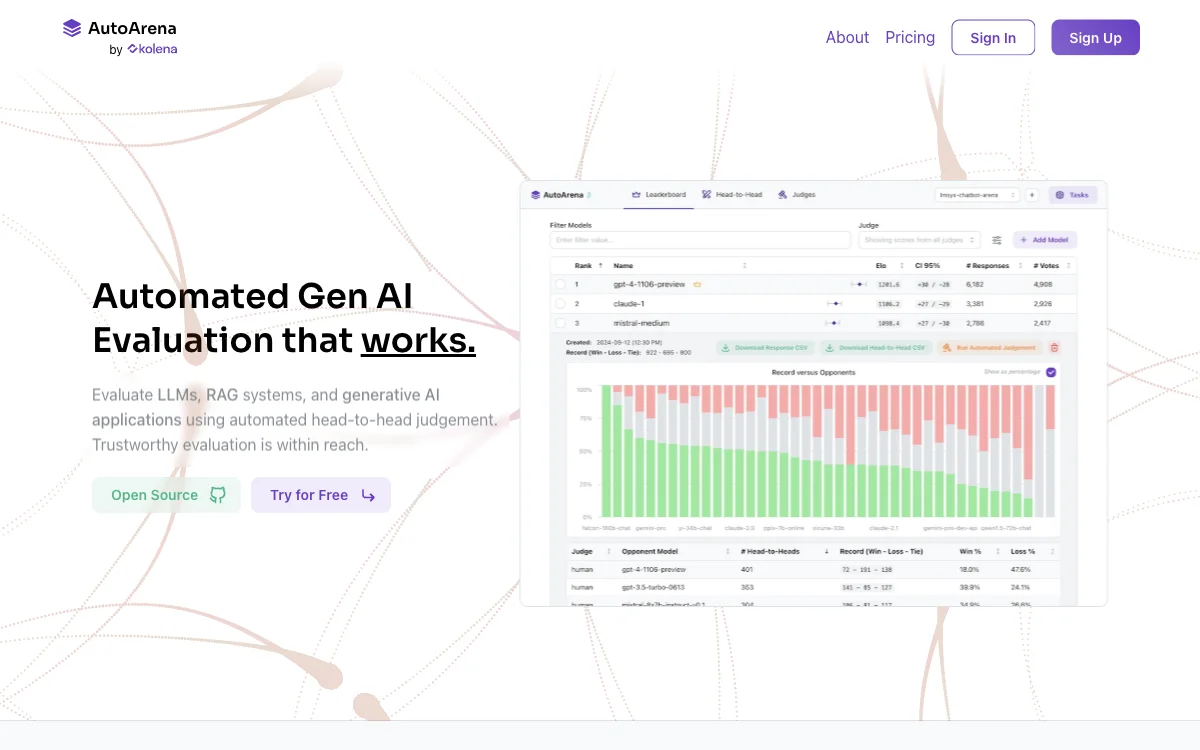

AutoArena is a tool for evaluating generative AI applications quickly, accurately, and at low cost. It uses automated head-to-head judgement to assess the performance of Large Language Models (LLMs), Retrieval-Augmented Generation (RAG) systems, and other generative AI applications. Because it can draw on judge models from leading AI providers such as OpenAI, Anthropic, Cohere, Google, and Together AI, AutoArena aims to produce trustworthy, reliable evaluation results.

The platform uses the LLM-as-a-judge technique in its pairwise form: a judge model sees two responses to the same prompt and decides which one is better. This head-to-head setup has been found to give more accurate assessments than asking a judge to score a single response in isolation. AutoArena supports both proprietary APIs and open-weights judge models, allowing flexible testing setups. It then turns many head-to-head votes into a leaderboard by computing Elo scores and confidence intervals, giving a clear overview of how each system performs.
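To make the leaderboard step concrete, here is a minimal Python sketch of one common way to turn pairwise votes into Elo ratings with bootstrap confidence intervals. It is not AutoArena's implementation: the vote format `(model_a, model_b, winner)`, the K-factor of 4, the starting rating of 1000, and the percentile bootstrap are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict

# Assumed vote format: (model_a, model_b, winner), where winner is
# "A" (model_a won), "B" (model_b won), or "-" (tie).
SCORES = {"A": 1.0, "B": 0.0, "-": 0.5}


def compute_elo(votes, k=4.0, base=1000.0, scale=400.0):
    """Online Elo: update both ratings after every head-to-head vote."""
    ratings = defaultdict(lambda: base)
    for a, b, winner in votes:
        ra, rb = ratings[a], ratings[b]
        # Expected score of model_a under the Elo logistic model
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / scale))
        delta = k * (SCORES[winner] - expected_a)
        ratings[a] = ra + delta  # zero-sum: what model_a gains, model_b loses
        ratings[b] = rb - delta
    return dict(ratings)


def bootstrap_ci(votes, n_rounds=200, alpha=0.05, seed=0):
    """Percentile confidence intervals from Elo recomputed on resampled votes."""
    rng = random.Random(seed)
    samples = defaultdict(list)
    for _ in range(n_rounds):
        resampled = [rng.choice(votes) for _ in votes]  # sample with replacement
        for model, rating in compute_elo(resampled).items():
            samples[model].append(rating)
    intervals = {}
    for model, values in samples.items():
        values.sort()
        lo = values[int(alpha / 2 * len(values))]
        hi = values[int((1 - alpha / 2) * len(values)) - 1]
        intervals[model] = (lo, hi)
    return intervals


# Toy votes for illustration only
votes = [
    ("gpt", "claude", "A"),
    ("gpt", "command-r", "A"),
    ("claude", "command-r", "A"),
    ("gpt", "claude", "-"),
]
ratings = compute_elo(votes)
intervals = bootstrap_ci(votes)
for model in sorted(ratings, key=ratings.get, reverse=True):
    lo, hi = intervals[model]
    print(f"{model:10s} {ratings[model]:7.1f}  95% CI [{lo:.1f}, {hi:.1f}]")
```

Online Elo depends on the order in which votes arrive; resampling in the bootstrap partly averages this out, and a Bradley-Terry maximum-likelihood fit is a common order-independent alternative.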

AutoArena can also use "juries" of LLM judges: instead of relying on a single large judge, it combines the verdicts of several smaller, faster, and cheaper judge models into one stronger evaluation signal. This improves reliability while reducing both cost and evaluation time. The platform handles the operational details, including parallelization, randomization, correcting bad responses, retrying, and rate limiting, so users do not have to build this machinery themselves.
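The jury idea, together with randomization and retries, can be sketched in a few lines of Python. This is an illustrative sketch, not AutoArena's code: the judge signature `(prompt, first, second) -> "A" | "B" | "-"`, the helpers `jury_vote` and `call_with_retry`, and the simple majority rule are all hypothetical. Each judge sees the two responses in a random order, which counters position bias, and its verdict is mapped back to the original labels before the votes are tallied.

```python
import random
import time
from collections import Counter


def call_with_retry(judge, *args, attempts=3, base_delay=0.5):
    """Call a judge, retrying with exponential backoff on failure (e.g. rate limits)."""
    for attempt in range(attempts):
        try:
            return judge(*args)
        except Exception:  # simplification: real code would catch specific API errors
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


def jury_vote(judges, prompt, response_a, response_b, rng=None):
    """Majority vote over several judges, randomizing response order per judge."""
    rng = rng or random.Random()
    votes = []
    for judge in judges:
        swapped = rng.random() < 0.5
        first, second = (response_b, response_a) if swapped else (response_a, response_b)
        verdict = call_with_retry(judge, prompt, first, second)
        # Map the verdict back to the original A/B labels if we swapped the order
        if swapped and verdict in ("A", "B"):
            verdict = "B" if verdict == "A" else "A"
        votes.append(verdict)
    counts = Counter(votes)
    if counts["A"] > counts["B"]:
        return "A"
    if counts["B"] > counts["A"]:
        return "B"
    return "-"  # tie between A and B votes


# Toy usage with stand-in judges that simply prefer the longer response
prefers_longer = lambda prompt, x, y: "A" if len(x) > len(y) else "B"
print(jury_vote([prefers_longer] * 3, "Explain RAG.", "A detailed answer...", "Short."))
```

Majority voting is the simplest aggregation rule; weighting each judge by its agreement with human labels is a natural refinement.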

To reduce evaluation bias, such as a judge favoring responses that resemble its own model family's output, AutoArena recommends using judge models from different families, for example GPT, Command-R, and Claude. This diversity makes the overall assessment more balanced and fair. The platform also supports fine-tuning judge models for more accurate, domain-specific evaluations: human preferences collected through the head-to-head voting interface can be used to fine-tune a custom judge, which can significantly improve its alignment with human preferences.
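One straightforward way to check whether a fine-tuned judge is better aligned with human preferences is to measure how often its verdicts match human votes on the same comparisons. The sketch below assumes votes are stored as dictionaries keyed by a comparison ID; the function names and the toy data are hypothetical, and AutoArena may measure alignment differently.

```python
def agreement_rate(judge_votes, human_votes):
    """Fraction of shared comparisons where the judge agrees with the human vote."""
    shared = judge_votes.keys() & human_votes.keys()
    if not shared:
        raise ValueError("judge and human votes share no comparisons")
    matches = sum(judge_votes[cid] == human_votes[cid] for cid in shared)
    return matches / len(shared)


def rank_judges(votes_by_judge, human_votes):
    """Rank judges (e.g. from different model families) by agreement with humans."""
    scores = {name: agreement_rate(v, human_votes) for name, v in votes_by_judge.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy data: verdicts are "A", "B", or "-" (tie), keyed by comparison ID
human = {"q1": "A", "q2": "B", "q3": "A", "q4": "-"}
base_judge = {"q1": "A", "q2": "A", "q3": "B", "q4": "-"}
tuned_judge = {"q1": "A", "q2": "B", "q3": "A", "q4": "A"}

for name, rate in rank_judges({"base": base_judge, "tuned": tuned_judge}, human):
    print(f"{name}: {rate:.0%} agreement with human votes")
```

Raw agreement does not correct for agreement by chance; a chance-corrected statistic such as Cohen's kappa is a stricter alternative.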

AutoArena is built to fit into the development workflow, including running evaluations in Continuous Integration (CI) environments. In CI it can automatically detect regressions introduced by prompt changes, preprocessing or postprocessing updates, and RAG system modifications, helping ensure that only the best versions of your system are deployed. The platform also provides a GitHub bot that comments on pull requests, making it easier for team members to collaborate and give feedback.
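A CI check of this kind usually reduces to a threshold on head-to-head results between the candidate change and the current baseline. The Python sketch below is a hypothetical gate, not AutoArena's CI integration or GitHub bot: it assumes the verdict for each test prompt is already available as "A" (candidate wins), "B" (baseline wins), or "-" (tie), counts ties as half a win, and returns a nonzero exit code when the candidate's win rate falls below a chosen threshold.

```python
def win_rate(verdicts):
    """Candidate win rate; 'A' = candidate wins, 'B' = baseline wins, '-' = tie (half)."""
    if not verdicts:
        raise ValueError("no verdicts to score")
    score = sum({"A": 1.0, "B": 0.0, "-": 0.5}[v] for v in verdicts)
    return score / len(verdicts)


def ci_gate(verdicts, threshold=0.5):
    """Return a process exit code: 0 if the candidate is good enough, 1 otherwise."""
    rate = win_rate(verdicts)
    passed = rate >= threshold
    print(f"candidate win rate vs. baseline: {rate:.0%} ({'pass' if passed else 'FAIL'})")
    return 0 if passed else 1


# Toy verdicts for illustration; in practice these come from the judges
exit_code = ci_gate(["A", "A", "-", "B", "A"])
# In a CI job, pass exit_code to sys.exit() so a regression fails the build.
```

A threshold of 0.5 blocks changes that lose more often than they win; with only a handful of test prompts, gating on a confidence interval rather than the point estimate avoids failing builds on noise.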

Whether you prefer to run evaluations locally, in the cloud, or in a dedicated on-premises deployment, AutoArena supports it. Local installation takes a single `pip install` command, so you can start testing in seconds. For team collaboration, AutoArena Cloud offers a hosted solution, while enterprises can opt for dedicated on-premises deployments on their own infrastructure.

AutoArena's pricing covers a wide range of users, from open-source enthusiasts to professional teams and enterprises. The Open-Source plan provides unrestricted access to the Apache-2.0-licensed application and suits students, researchers, hobbyists, and non-profits. The Professional plan, priced at $60 per user per month, adds team collaboration features, access to fine-tuned judge models, and dedicated support. For enterprises, AutoArena offers private on-premises deployments, SSO and enterprise access controls, and prioritized feature requests.

In summary, AutoArena is a comprehensive solution for evaluating generative AI applications that balances speed, accuracy, and cost. Its use of judge models, together with flexible deployment options and a user-friendly interface, makes it a strong choice for anyone looking to measure and improve their AI systems.