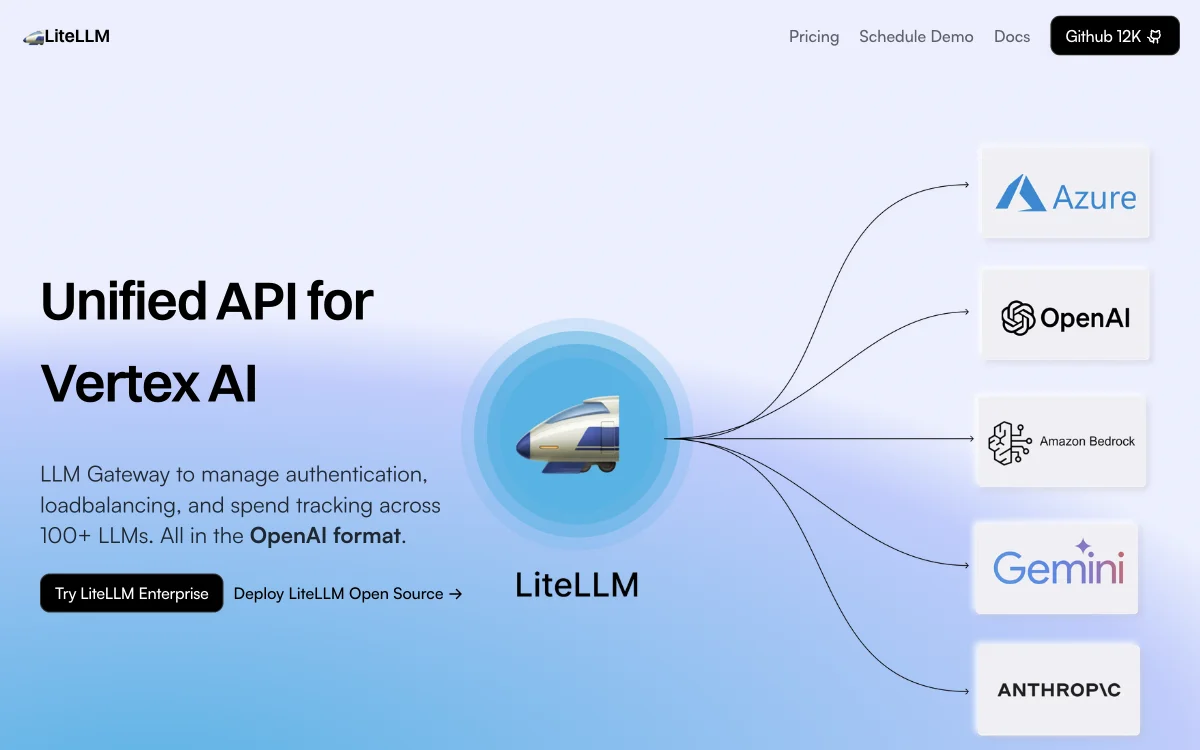

LiteLLM provides a unified API that can serve as an LLM gateway for Azure OpenAI. It simplifies working with multiple large language models (LLMs) by handling authentication, load balancing, and spend tracking behind a single interface. Because it supports more than 100 LLMs, developers and businesses can call many different models without integrating each provider's API separately.
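A toy model can illustrate the three gateway duties named above: authentication, load balancing, and spend tracking. This is a hypothetical sketch, not LiteLLM's implementation or API. `ToyGateway`, `Deployment`, the deployment names, and the per-token prices are all invented for illustration. Real gateways, LiteLLM included, offer routing strategies beyond the plain round-robin used here. The sketch also never calls a model: `total_tokens` stands in for the usage figure a real response would report.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Deployment:
    """One hypothetical Azure OpenAI deployment behind the gateway."""
    name: str
    usd_per_1k_tokens: float  # illustrative price, not a real Azure rate


@dataclass
class ToyGateway:
    """Toy gateway: API-key auth, round-robin load balancing, per-key spend."""
    deployments: list[Deployment]
    allowed_keys: set[str]
    spend_by_key: dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Endless iterator that hands out deployments in turn (round-robin).
        self._rotation = cycle(self.deployments)

    def route(self, api_key: str, total_tokens: int) -> str:
        """Authenticate the caller, pick a deployment, and record the cost."""
        # Authentication: reject unknown keys before touching the rotation.
        if api_key not in self.allowed_keys:
            raise PermissionError(f"unknown API key: {api_key!r}")
        # Load balancing: take the next deployment in the cycle.
        deployment = next(self._rotation)
        # Spend tracking: charge this key for the tokens the request used.
        cost = total_tokens / 1000 * deployment.usd_per_1k_tokens
        self.spend_by_key[api_key] = self.spend_by_key.get(api_key, 0.0) + cost
        return deployment.name
```

With two deployments, successive calls to `route` alternate between them, while `spend_by_key` accumulates each key's cost no matter which deployment served the request. Keeping spend per key rather than per deployment mirrors the idea of tracking usage per team or user.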

A key feature of LiteLLM is its compatibility with the OpenAI format: requests and responses follow the same structure as OpenAI's API, so teams already familiar with OpenAI's ecosystem can adopt it with minimal changes. This compatibility applies to both of LiteLLM's entry points: the Python SDK, which calls models directly from application code, and the Gateway (Proxy), a server that exposes an OpenAI-compatible endpoint for centrally managing access to LLMs.
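The following sketch shows what OpenAI-format compatibility means in practice. It builds a standard chat-completion request for a LiteLLM Gateway using only Python's standard library. It assumes a proxy running locally on port 4000 that accepts a key as a Bearer token. The URL, the key `sk-example-key`, and the model alias `azure-gpt-4o` are placeholders, and the alias would have to match a model configured on your proxy.

```python
import json
import urllib.request

# Assumptions: a LiteLLM Gateway (Proxy) is reachable at this URL and accepts
# this key. Both values are hypothetical placeholders.
PROXY_URL = "http://localhost:4000/chat/completions"
API_KEY = "sk-example-key"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-format chat completion request aimed at the gateway."""
    body = {
        "model": model,  # a model alias configured on the proxy (assumed)
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        PROXY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    # OpenAI-format responses put the reply at choices[0].message.content.
    return payload["choices"][0]["message"]["content"]
```

Against a running proxy, `send(build_chat_request("azure-gpt-4o", "Hello"))` would return the model's reply text. Because the payload is plain OpenAI format, the same request could also be sent with the official OpenAI Python client by setting its `base_url` to the proxy's address.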

LiteLLM reports 100% uptime, more than 20 million requests served, and over 90,000 Docker pulls. The project is also backed by a community of more than 200 contributors who continue to improve it.

For businesses scaling their AI workloads, LiteLLM offers enterprise features ranging from Prometheus metrics to dedicated support with custom SLAs. LiteLLM can be used in the cloud or deployed self-hosted, so teams can choose the option that fits their security and infrastructure requirements.

By handling model access, load balancing, and spend tracking in one place, LiteLLM lets developers and businesses focus on building AI applications rather than on managing LLM infrastructure.