The LLM Token Counter helps you manage token limits for widely used large language models (LLMs), including GPT-3.5, GPT-4, Claude 3, Llama 3, and many others. It lets anyone working with generative AI confirm that a prompt fits within a model's token limit, which helps avoid unexpected or undesirable outputs.

Why Use an LLM Token Counter?

Every LLM accepts only a limited number of tokens, so the token count of your prompt must fall within the model's limit. Exceeding this limit may result in unexpected or undesirable outputs from the LLM. The LLM Token Counter helps you stay within these limits.
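As a rough illustration of the limit check described above, the sketch below compares a prompt's token count against a context limit while reserving room for the model's reply. The function name `checkTokenBudget`, the `reservedForReply` parameter, and the numbers used are hypothetical and chosen only for illustration; real limits come from each model provider's documentation.

```typescript
// Result of checking a prompt against a model's token limit.
interface BudgetCheck {
  fits: boolean;
  remaining: number; // tokens left over; negative when the prompt is too long
}

// Hypothetical helper: contextLimit and reservedForReply are supplied by the
// caller. This sketch does not know any real model's limit.
function checkTokenBudget(
  promptTokens: number,
  contextLimit: number,
  reservedForReply: number = 0,
): BudgetCheck {
  const remaining = contextLimit - promptTokens - reservedForReply;
  return { fits: remaining >= 0, remaining };
}

// Illustrative numbers only: 7,900 prompt tokens, a limit of 8,192,
// and 500 tokens held back for the reply.
const check = checkTokenBudget(7_900, 8_192, 500);
console.log(check.fits, check.remaining); // false -208
```

Reserving part of the budget for the reply is a design choice for cases where the prompt and the generated output share one limit; pass `0` if that does not apply.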

How Does the LLM Token Counter Work?

The LLM Token Counter uses Transformers.js, a JavaScript implementation of the Hugging Face Transformers library. Tokenizers are loaded directly in your browser, so the token count is calculated client-side. Because your prompt is tokenized locally, with no round trip to a server, the calculation is fast and your text stays on your device.
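Client-side counting of this kind might look like the sketch below. It is not the tool's actual source: the package name `@huggingface/transformers`, the helper names `loadTokenizer` and `countTokens`, and the model ID in the usage note are assumptions made for illustration.

```typescript
// Minimal shape this sketch needs from a tokenizer: text in, token IDs out.
interface TokenizerLike {
  encode(text: string): number[];
}

// Count tokens by encoding the text and measuring the resulting ID list.
// Depending on the tokenizer's configuration, the count may include
// special tokens added during encoding.
function countTokens(tokenizer: TokenizerLike, text: string): number {
  return tokenizer.encode(text).length;
}

// Load a tokenizer with Transformers.js. The tokenizer files are downloaded
// once; the text being counted is never sent anywhere by this code.
async function loadTokenizer(modelId: string): Promise<TokenizerLike> {
  // Assumed package name. The specifier is held in a variable so this
  // sketch type-checks even where the package is not installed.
  const specifier: string = "@huggingface/transformers";
  const { AutoTokenizer } = await import(specifier);
  return AutoTokenizer.from_pretrained(modelId);
}
```

A caller would then write something like `const tokenizer = await loadTokenizer("Xenova/gpt-4")` (an assumed model ID) followed by `countTokens(tokenizer, prompt)`. Keeping `countTokens` independent of the loader means any object with a compatible `encode` method can be swapped in.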

Data Privacy and Security

A key feature of the LLM Token Counter is its commitment to data privacy. Because the token count is calculated client-side, your prompt remains secure and confidential: it is never transmitted to the server or any external entity.

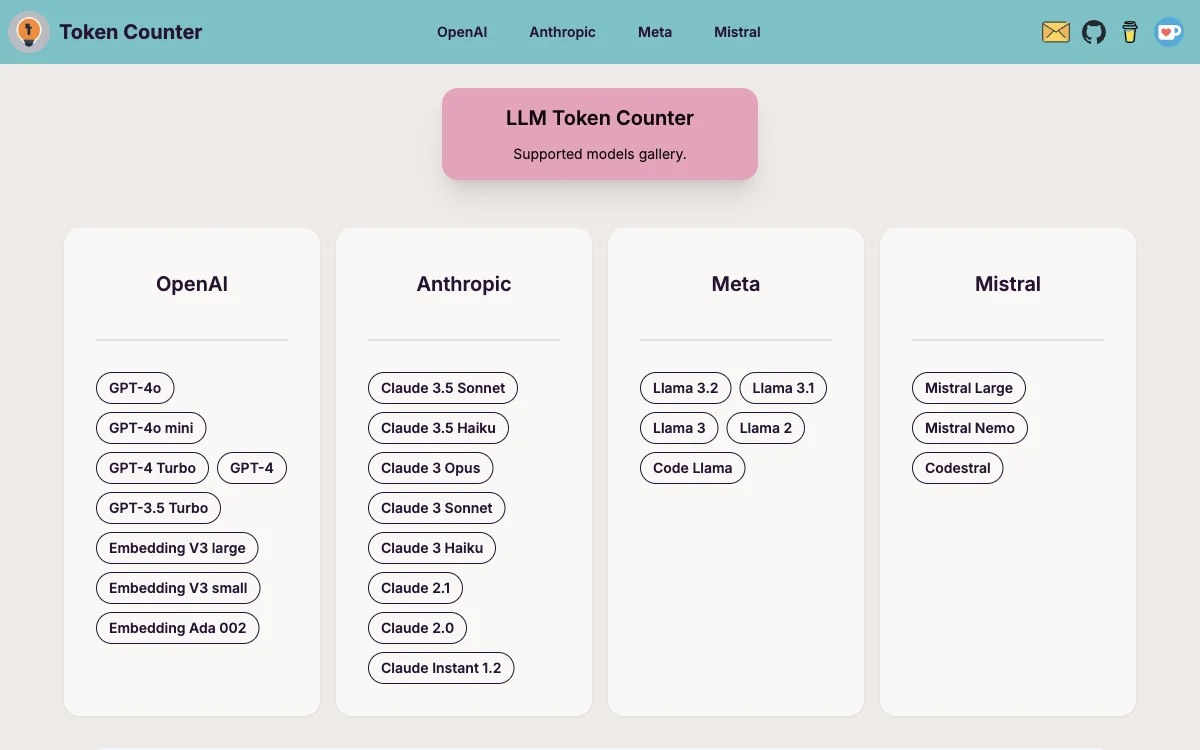

Supported Models

The LLM Token Counter supports a wide range of models, including OpenAI's GPT-4 and GPT-3.5 Turbo, Anthropic's Claude 3.5 Sonnet, Meta's Llama 3, and Mistral AI's Mistral Large, among others. The tool is continuously updated to include new models and enhance its capabilities.

Conclusion

The LLM Token Counter is a practical tool for anyone working with large language models. It combines efficient token limit management with a commitment to data privacy and security. Whether you're a developer, researcher, or enthusiast, it helps you get the most out of generative AI technology.