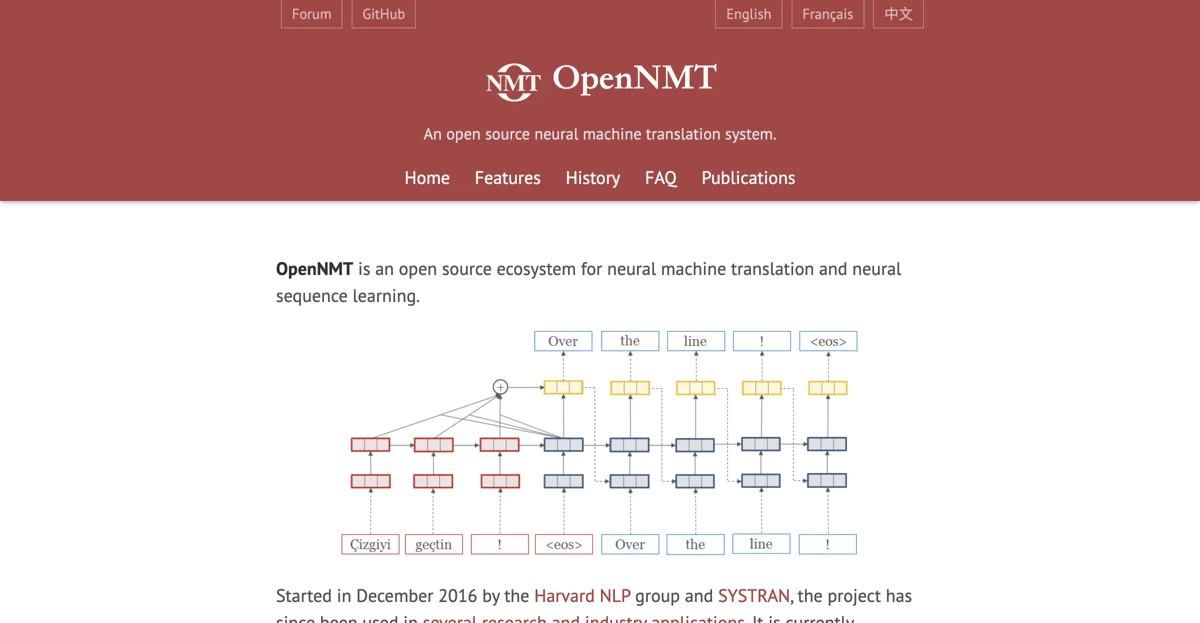

OpenNMT is an open-source ecosystem for neural machine translation (NMT) and neural sequence learning. Started in December 2016 as a collaboration between the Harvard NLP group and SYSTRAN, it has since been adopted in both research and industry, and it is currently maintained by SYSTRAN and Ubiqus. The ecosystem covers a wide range of NMT needs through two main implementations: OpenNMT-py and OpenNMT-tf.

OpenNMT-py is designed to be user-friendly and multimodal, and it benefits from the ease of use of PyTorch. It comes with comprehensive documentation and a selection of pretrained models, which makes it accessible to beginners and experienced practitioners alike. OpenNMT-tf, on the other hand, is built on the TensorFlow ecosystem and emphasizes modularity and stability. It also provides detailed documentation and pretrained models for those who prefer working in TensorFlow.

Both implementations share the same goals: highly configurable model architectures and training procedures, efficient model serving for real-world applications, and extensions to tasks beyond translation, such as text generation, tagging, summarization, image-to-text, and speech-to-text. The ecosystem also includes supporting projects such as CTranslate2, an efficient inference engine for Transformer models on CPU and GPU, and Tokenizer, a fast and customizable text tokenization library with support for BPE and SentencePiece.
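Since Tokenizer's BPE support comes up above, here is a toy sketch of how byte-pair encoding learns subword units: start from characters, then repeatedly merge the most frequent adjacent pair. This is a plain-Python illustration of the general algorithm only, not the Tokenizer library's API or implementation; the functions `merge_symbols`, `learn_bpe`, and `apply_bpe` and the tiny word-frequency corpus are hypothetical.

```python
from collections import Counter


def merge_symbols(symbols, pair):
    """Return a new symbol list with each adjacent occurrence of `pair` fused into one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge operations from a {word: frequency} dict (hypothetical helper)."""
    # Each word starts as a tuple of characters plus an end-of-word marker.
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:  # every word is already a single symbol
            break
        # Most frequent pair; ties go to the pair counted first.
        best = max(pairs, key=pairs.get)
        merges.append(best)
        # Rewrite the vocabulary with the chosen pair merged everywhere.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[tuple(merge_symbols(list(symbols), best))] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Segment a (possibly unseen) word by replaying the learned merges in order."""
    symbols = list(word) + ["</w>"]
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return symbols


if __name__ == "__main__":
    corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
    merges = learn_bpe(corpus, num_merges=3)
    print(merges)                       # [('e', 's'), ('es', 't'), ('est', '</w>')]
    print(apply_bpe("lowest", merges))  # ['l', 'o', 'w', 'est</w>']
```

The unseen word "lowest" is split into known pieces, with the frequent suffix learned as a single unit. This is the property that lets an NMT model handle rare words with a fixed-size vocabulary. Production tokenizers add details this sketch omits, such as joiner markers and fast merge lookup.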

OpenNMT's projects are released under the MIT license, which permits broad use, modification, and contribution. With its comprehensive documentation, pretrained models, and active community, OpenNMT continues to help developers, researchers, and businesses put neural machine translation and sequence learning into practice.