Polymath uses machine learning to turn any music library into a sample library for music production. It automatically separates songs into stems (such as beats, bass, and vocals), quantizes them to a common tempo and beat grid, and analyzes musical structure, key, and other audio features. The result is a searchable sample library that streamlines the workflow of music producers, DJs, and ML audio developers.
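Quantizing a stem to a uniform beat grid can be illustrated with a small sketch. It assumes beat onset times (in seconds) have already been detected, for example with a beat tracker such as `librosa.beat.beat_track`. The hypothetical helpers below estimate the source tempo from the median inter-beat interval, compute a global stretch ratio, and build a time map that moves each detected beat onto an evenly spaced grid at the target tempo. The function names and the `(input_sample, output_sample)` time-map format are illustrative assumptions, not Polymath's internals.

```python
import statistics
from typing import List, Sequence, Tuple


def estimate_bpm(beat_times: Sequence[float]) -> float:
    """Estimate tempo from the median inter-beat interval (robust to a few bad beats)."""
    if len(beat_times) < 2:
        raise ValueError("need at least two beat times")
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    median_interval = statistics.median(intervals)
    if median_interval <= 0:
        raise ValueError("beat times must be increasing")
    return 60.0 / median_interval


def stretch_rate(source_bpm: float, target_bpm: float) -> float:
    """Global speed factor: above 1 speeds the track up, below 1 slows it down."""
    return target_bpm / source_bpm


def beat_grid_time_map(
    beat_times: Sequence[float], target_bpm: float, sr: int
) -> List[Tuple[int, int]]:
    """Pair each detected beat (input sample) with its slot on a uniform grid (output sample).

    The grid starts at the first detected beat and advances by one beat
    period at target_bpm for every subsequent beat.
    """
    if not beat_times:
        raise ValueError("need at least one beat time")
    if target_bpm <= 0:
        raise ValueError("target_bpm must be positive")
    beat_period = 60.0 / target_bpm
    start = beat_times[0]
    return [
        (round(t * sr), round((start + i * beat_period) * sr))
        for i, t in enumerate(beat_times)
    ]
```

Anchoring every beat, rather than applying only the single global ratio from `stretch_rate`, also removes tempo drift within a song; a piecewise time-stretcher would then interpolate between the anchors.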

Polymath builds on several existing tools: the Demucs neural network for music source separation, the sf_segmenter package for music structure segmentation, the Crepe neural network for pitch tracking and key detection, and Basic Pitch for music-to-MIDI transcription. It also uses pyrubberband for music quantization and alignment, and librosa for music information retrieval and processing.
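As an illustration of key detection from pitch-tracker output, the sketch below folds per-frame frequency estimates (the kind of frequency and confidence arrays a tracker like Crepe produces) into a 12-bin pitch-class histogram, then correlates that histogram with the Krumhansl-Kessler key profiles for all 24 major and minor keys. This classic key-finding technique is swapped in for illustration only; Polymath's own key detection may work differently, and the function names and the `min_confidence` threshold are hypothetical.

```python
import math
from typing import Optional, Sequence

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
# Krumhansl-Kessler key profiles, listed from the tonic upward in semitones.
MAJOR_PROFILE = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR_PROFILE = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]


def hz_to_pitch_class(freq_hz: float) -> int:
    """Map a frequency to its pitch class (0 = C, ..., 11 = B) using A4 = 440 Hz."""
    midi = 69 + 12 * math.log2(freq_hz / 440.0)
    return round(midi) % 12


def _pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either input has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def estimate_key(
    frequencies: Sequence[float],
    confidences: Optional[Sequence[float]] = None,
    min_confidence: float = 0.5,
) -> str:
    """Return the best-matching key name, e.g. "C major" or "A minor"."""
    if confidences is None:
        confidences = [1.0] * len(frequencies)
    histogram = [0.0] * 12
    for freq, conf in zip(frequencies, confidences):
        # Skip unvoiced frames (frequency 0) and low-confidence estimates.
        if freq > 0 and conf >= min_confidence:
            histogram[hz_to_pitch_class(freq)] += 1
    if sum(histogram) == 0:
        raise ValueError("no voiced frames above the confidence threshold")

    best_score, best_key = -math.inf, ""
    for tonic in range(12):
        for mode, profile in (("major", MAJOR_PROFILE), ("minor", MINOR_PROFILE)):
            # Rotate the profile so that index `tonic` holds the tonic weight.
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            score = _pearson(histogram, rotated)
            if score > best_score:
                best_score, best_key = score, f"{NOTE_NAMES[tonic]} {mode}"
    return best_key
```

For example, frames dominated by C, E, and G with occasional passing notes from the C major scale are classified as `"C major"`.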

For music producers, Polymath makes it easier to combine elements from different songs into new compositions. DJs can use its search to find related tracks and build polished, hour-long mash-up sets. ML developers can use it to assemble large music datasets for training generative models.
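A related-track search can be sketched as a filter over analyzed metadata. The hypothetical `Track` record and `find_related` function below keep tracks whose tempo lies within a relative tolerance of the query and whose key is either identical or the relative major/minor, then sort the matches by tempo distance. The fields, the 6% default tolerance, and the key-compatibility rule are assumptions for illustration; Polymath's actual search criteria may differ.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Track:
    name: str
    bpm: float
    tonic: int   # pitch class: 0 = C, ..., 11 = B
    minor: bool  # True for a minor key, False for major


def keys_compatible(a: Track, b: Track) -> bool:
    """Same key, or relative major/minor (minor tonic = major tonic + 9 semitones)."""
    if (a.tonic, a.minor) == (b.tonic, b.minor):
        return True
    if a.minor != b.minor:
        major, minor = (b, a) if a.minor else (a, b)
        return (major.tonic + 9) % 12 == minor.tonic
    return False


def find_related(
    query: Track, library: Sequence[Track], bpm_tolerance: float = 0.06
) -> List[Track]:
    """Return tracks with a close tempo and a compatible key, nearest tempo first."""
    matches = []
    for track in library:
        if track.name == query.name:
            continue  # do not return the query itself
        tempo_ok = abs(track.bpm - query.bpm) <= bpm_tolerance * query.bpm
        if tempo_ok and keys_compatible(query, track):
            matches.append(track)
    return sorted(matches, key=lambda t: abs(t.bpm - query.bpm))
```

Filtering on tempo and key first keeps the candidate set small; a real system could then rank the survivors by richer audio features.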

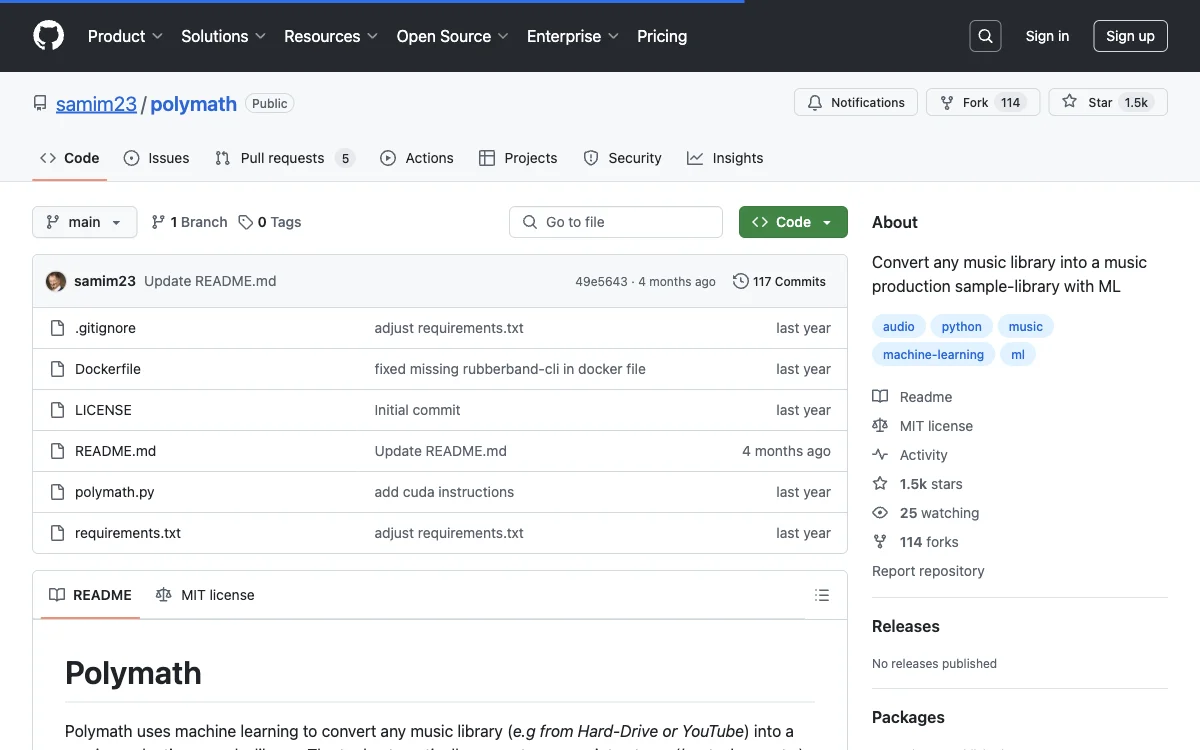

Polymath requires Python 3.7 to 3.10. To install it, clone the repository from GitHub and install the required packages. Most of its underlying libraries support GPU acceleration through CUDA, which speeds up processing considerably. A Docker setup is also available for containerized deployments; GPU support for Docker is planned but not yet available.
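Because Polymath only supports Python 3.7 to 3.10, a quick check before installing can save a failed setup. The sketch below is a hypothetical helper, not part of Polymath: it tests an interpreter version against that range and, assuming PyTorch is installed (Demucs runs on PyTorch), reports whether CUDA is visible through `torch.cuda.is_available()`.

```python
import sys
from typing import Sequence

# Supported range as stated in the installation notes (inclusive).
SUPPORTED_MIN = (3, 7)
SUPPORTED_MAX = (3, 10)


def is_supported_python(version: Sequence[int] = tuple(sys.version_info[:2])) -> bool:
    """True if (major, minor) lies within the supported 3.7 to 3.10 range."""
    major_minor = tuple(version[:2])
    return SUPPORTED_MIN <= major_minor <= SUPPORTED_MAX


def cuda_available() -> bool:
    """True if PyTorch is importable and reports a usable CUDA device."""
    try:
        import torch  # assumed to be installed alongside the requirements
    except ImportError:
        return False
    return bool(torch.cuda.is_available())
```

Calling `is_supported_python()` with no argument checks the running interpreter; if `cuda_available()` returns `False`, processing still works but falls back to the slower CPU path.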

Polymath has an active community on Discord, where users share insights, ask for help, and contribute to the tool's development. Polymath is open source and released under the MIT license.