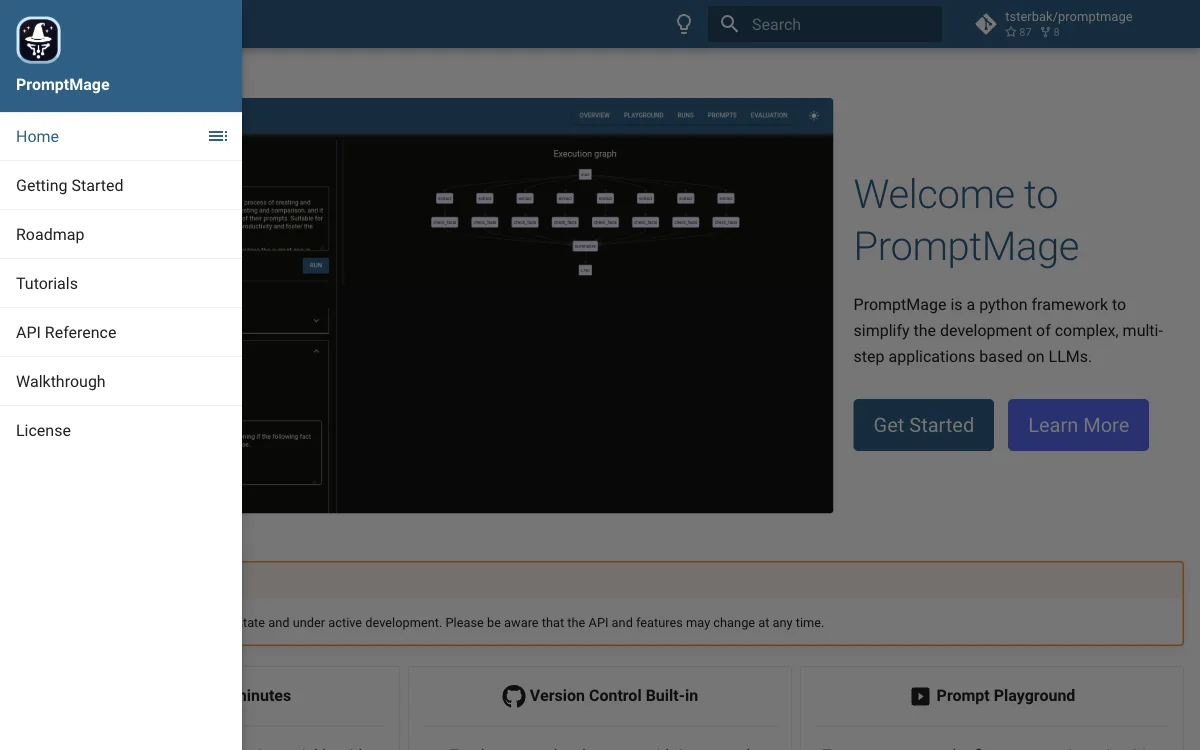

PromptMage is a Python framework for developers and researchers who want to simplify building complex, multi-step applications powered by Large Language Models (LLMs). Its core aim is to provide an intuitive interface for developing and managing LLM workflows. It is designed as a self-hosted solution that can serve small teams as well as large enterprises.
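To make "multi-step" concrete, here is a minimal sketch in plain Python of chaining LLM calls so that each step's output becomes the next step's input. It does not use PromptMage's API. `Workflow`, `fake_llm`, and the step names are hypothetical, and `fake_llm` only stands in for a real model call so the example runs offline.

```python
from typing import Callable, List

# Hypothetical offline stand-in for a real LLM call: it upper-cases the
# prompt so the pipeline runs deterministically without a model or API key.
def fake_llm(prompt: str) -> str:
    return prompt.upper()


class Workflow:
    """Minimal multi-step pipeline: each step's output feeds the next step."""

    def __init__(self) -> None:
        self.steps: List[Callable[[str], str]] = []

    def step(self, func: Callable[[str], str]) -> Callable[[str], str]:
        # Decorator that registers a function as the next step, in definition order.
        self.steps.append(func)
        return func

    def run(self, text: str) -> str:
        # Pass the running result through every registered step in turn.
        for func in self.steps:
            text = func(text)
        return text


flow = Workflow()


@flow.step
def extract_facts(article: str) -> str:
    return fake_llm(f"Extract facts: {article}")


@flow.step
def summarize(facts: str) -> str:
    return fake_llm(f"Summarize: {facts}")


print(flow.run("PromptMage is a Python framework."))
# prints "SUMMARIZE: EXTRACT FACTS: PROMPTMAGE IS A PYTHON FRAMEWORK."
```

A framework like PromptMage adds what this sketch leaves out: managing the prompts themselves, a UI, and an API around the steps.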

One of PromptMage's standout features is built-in version control for prompts, which supports collaboration and iteration within a team. Every change to a prompt is tracked, so team members can see how each prompt evolved over time and work from a shared history.
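The core idea behind prompt version control can be sketched as an append-only history per prompt name. This is a hypothetical in-memory illustration, not PromptMage's storage model or API. `PromptStore` and `PromptVersion` are names invented for the example, and a real system would persist versions rather than keep them in a dictionary.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class PromptVersion:
    version: int
    text: str
    created_at: datetime


@dataclass
class PromptStore:
    """Hypothetical append-only, in-memory history of prompts keyed by name."""

    history: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def save(self, name: str, text: str) -> int:
        versions = self.history.setdefault(name, [])
        # Don't create a new version if the text hasn't changed.
        if versions and versions[-1].text == text:
            return versions[-1].version
        number = len(versions) + 1
        versions.append(PromptVersion(number, text, datetime.now(timezone.utc)))
        return number

    def get(self, name: str, version: Optional[int] = None) -> str:
        # Return the latest text by default, or a specific 1-based version.
        versions = self.history[name]
        if version is None:
            return versions[-1].text
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name!r} has no version {version}")
        return versions[version - 1].text


store = PromptStore()
store.save("summarize", "Summarize the text.")                          # version 1
store.save("summarize", "Summarize the text in three bullet points.")   # version 2
store.save("summarize", "Summarize the text in three bullet points.")   # unchanged, stays 2
print(store.get("summarize"))     # latest version
print(store.get("summarize", 1))  # original version
```

Keeping old versions immutable means an earlier prompt can always be retrieved and compared against the current one.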

PromptMage also includes a Prompt Playground, an interface built for rapid iteration where users can test, compare, and refine their prompts. This is especially useful for developers tuning their LLM applications for better performance and reliability.

Another notable aspect of PromptMage is its auto-generated API, built on FastAPI, which simplifies integration and deployment. Because workflows are exposed through this API, developers can integrate PromptMage into their existing systems with little extra work, which shortens the development cycle.
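FastAPI builds its endpoints by reading the signatures and type hints of ordinary Python functions. The stdlib-only sketch below shows that general idea by deriving an endpoint description from a step function. It is an assumption-laden illustration, not how PromptMage actually generates its API. `describe_endpoint`, the `POST` method, and the `/<function name>` path scheme are all hypothetical choices.

```python
import inspect
from typing import Any, Callable, Dict


def describe_endpoint(func: Callable[..., Any]) -> Dict[str, Any]:
    """Hypothetical helper: derive an endpoint description from a signature."""
    params: Dict[str, str] = {}
    for name, param in inspect.signature(func).parameters.items():
        annotation = param.annotation
        # Unannotated parameters are reported as "any".
        if annotation is inspect.Parameter.empty:
            params[name] = "any"
        else:
            params[name] = getattr(annotation, "__name__", str(annotation))
    return {"method": "POST", "path": f"/{func.__name__}", "params": params}


def summarize(text: str, max_words: int = 50) -> str:
    # Example step: keep only the first `max_words` words.
    return " ".join(text.split()[:max_words])


print(describe_endpoint(summarize))
# {'method': 'POST', 'path': '/summarize', 'params': {'text': 'str', 'max_words': 'int'}}
```

Deriving the interface from the function itself means the API stays in sync with the code without a separately maintained schema.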

PromptMage also emphasizes evaluation, offering both manual and automatic modes for assessing prompt performance. Systematic evaluation helps confirm that an application is reliable before deployment, reducing the risk of errors and improving the overall quality of LLM applications.
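An automatic evaluation can be as simple as running each prompt version over a fixed set of inputs and scoring the outputs. The sketch below compares two prompt templates by exact-match accuracy. It is a hypothetical illustration rather than PromptMage's evaluation mode: `fake_llm`, `evaluate`, the templates, and the test cases are all invented, and `fake_llm` mimics a model that only answers with a bare label when asked to.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical offline stand-in for a model: it detects sentiment by keyword,
# but only replies with a bare label when the prompt asks for one word.
def fake_llm(prompt: str) -> str:
    label = "positive" if "great" in prompt.lower() else "negative"
    if "one word" in prompt.lower():
        return label
    return f"I think the sentiment is {label}."


def evaluate(template: str, cases: List[Tuple[str, str]], llm: Callable[[str], str]) -> float:
    """Exact-match accuracy of a prompt template over (input, expected) pairs."""
    hits = 0
    for user_input, expected in cases:
        output = llm(template.format(input=user_input))
        if output.strip().lower() == expected.lower():
            hits += 1
    return hits / len(cases)


prompts: Dict[str, str] = {
    "v1": "What is the sentiment of: {input}",
    "v2": "Reply with one word, positive or negative: {input}",
}
cases = [("This tool is great", "positive"), ("It crashed twice", "negative")]

scores = {name: evaluate(tpl, cases, fake_llm) for name, tpl in prompts.items()}
print(scores)  # {'v1': 0.0, 'v2': 1.0}
```

Exact match is the strictest possible metric; it is used here only because it keeps the sketch short. Real evaluations often need more forgiving scoring.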

PromptMage is still evolving, and more features and enhancements are planned, with the goal of making it a pragmatic solution for LLM workflow management. By making LLM technology more accessible and manageable, PromptMage aims to empower the developers, researchers, and organizations building the next generation of AI applications.