StableBeluga2 is an auto-regressive language model fine-tuned from Llama2 70B. It was developed by Stability AI and is licensed under the STABLE BELUGA NON-COMMERCIAL COMMUNITY LICENSE AGREEMENT.

The model was trained on an internal Orca-style dataset using supervised fine-tuning, in mixed precision (BF16), with the AdamW optimizer. The training hyperparameters include the batch size, learning rate, learning rate decay, warm-up, weight decay, and the AdamW betas.
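To illustrate how a learning rate, warm-up, and learning rate decay typically interact, here is a minimal sketch of a schedule with linear warm-up followed by cosine decay. The cosine shape, the function name `lr_at_step`, and every numeric value are assumptions chosen for illustration; the text above does not state the schedule or values actually used for StableBeluga2.

```python
import math


def lr_at_step(step, *, peak_lr, final_lr, warmup_steps, total_steps):
    """Learning rate at an optimizer step: linear warm-up, then cosine decay.

    Hypothetical helper for illustration only; not the schedule used to
    train StableBeluga2.
    """
    if step < warmup_steps:
        # Linear ramp that reaches peak_lr on the last warm-up step.
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    # Cosine interpolation from peak_lr (progress=0) to final_lr (progress=1).
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return final_lr + (peak_lr - final_lr) * cosine


# Placeholder values, not taken from the model's training configuration.
schedule = [
    lr_at_step(s, peak_lr=1.0, final_lr=0.1, warmup_steps=10, total_steps=110)
    for s in range(111)
]
```

In a real training loop, a function like this would be evaluated once per step and its result written into the optimizer's parameter groups, or wrapped in a scheduler object.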

Chatting with StableBeluga2 takes only a few steps. First, import `torch` from PyTorch and, from `transformers`, the `AutoModelForCausalLM` and `AutoTokenizer` classes along with `pipeline`. Next, initialize the tokenizer with `AutoTokenizer.from_pretrained("stabilityai/StableBeluga2", use_fast=False)` and load the model with `AutoModelForCausalLM.from_pretrained("stabilityai/StableBeluga2", torch_dtype=torch.float16, low_cpu_mem_usage=True, device_map="auto")`. Then define a system prompt to guide the model's behavior and supply a user message. Finally, tokenize the combined prompt, pass it to the model for generation, and decode the output to obtain the generated text.
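The steps above can be sketched as follows. The two `from_pretrained` calls use the arguments quoted in the text. The `### System:` / `### User:` / `### Assistant:` prompt layout, the system prompt wording, the sampling settings, and the helper names `build_prompt` and `generate_reply` are assumptions for illustration and should be checked against the model card before use.

```python
MODEL_ID = "stabilityai/StableBeluga2"

# Hypothetical system prompt; replace it with one suited to your application.
SYSTEM_PROMPT = "You are a helpful assistant that follows instructions carefully."


def build_prompt(system_prompt, message):
    """Combine the system prompt and user message into one prompt string.

    The section markers below are an assumed format, not confirmed by the text.
    """
    return (
        f"### System:\n{system_prompt}\n\n"
        f"### User:\n{message}\n\n"
        "### Assistant:\n"
    )


def generate_reply(message, system_prompt=SYSTEM_PROMPT, max_new_tokens=256):
    """Load the model, generate a reply, and return the decoded text."""
    # Imported here so build_prompt works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.float16,
        low_cpu_mem_usage=True,
        device_map="auto",
    )

    # Tokenize the prompt and move the tensors to the model's device.
    prompt = build_prompt(system_prompt, message)
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    # Sampling settings are illustrative assumptions.
    output = model.generate(
        **inputs, do_sample=True, top_p=0.95, max_new_tokens=max_new_tokens
    )

    # Decode only the newly generated tokens, skipping the echoed prompt.
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Write me a poem please")` downloads the 70B-parameter weights on first use and needs substantial GPU memory, so the function is defined here without being called.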

However, it should be noted that StableBeluga2, like other language models, has its limitations. As it is a new technology, there are risks associated with its use. The testing conducted so far has been mainly in English and has not covered all possible scenarios. Thus, the potential outputs of the model cannot be predicted with certainty in advance, and it may produce inaccurate, biased or other objectionable responses to user prompts. Therefore, developers who plan to deploy applications using this model should perform safety testing and tuning specific to their applications.

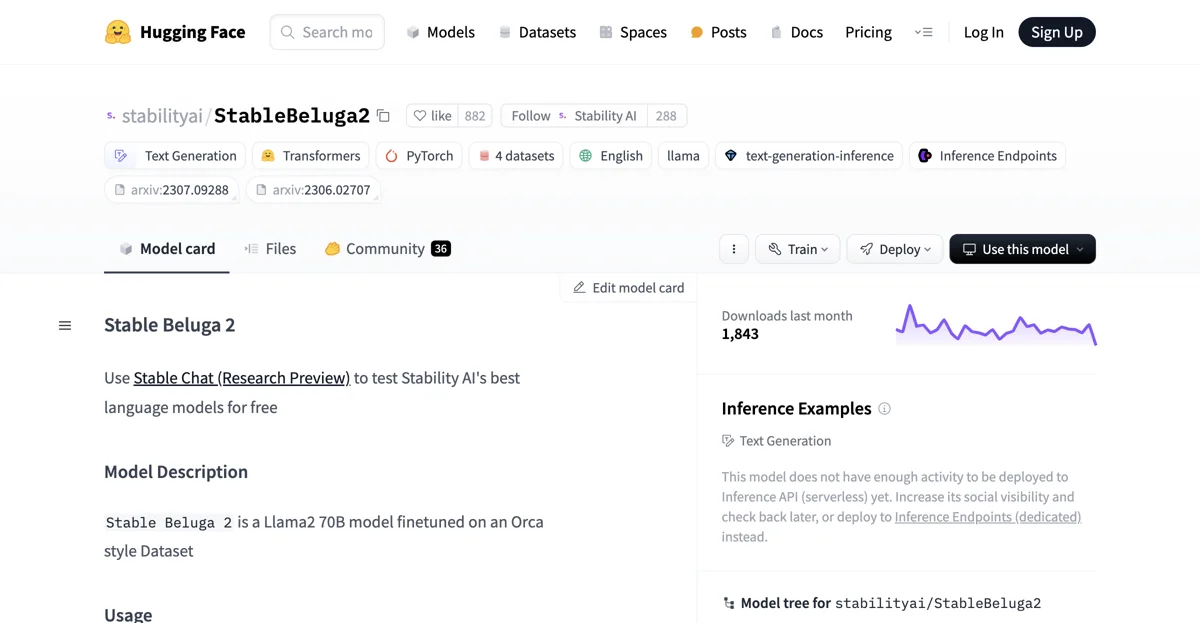

In terms of usage, the model was downloaded 1,843 times in the last month. It does not currently have enough activity to be deployed to the Inference API (serverless), but it can be deployed to Inference Endpoints (dedicated). Many Spaces also use StableBeluga2.