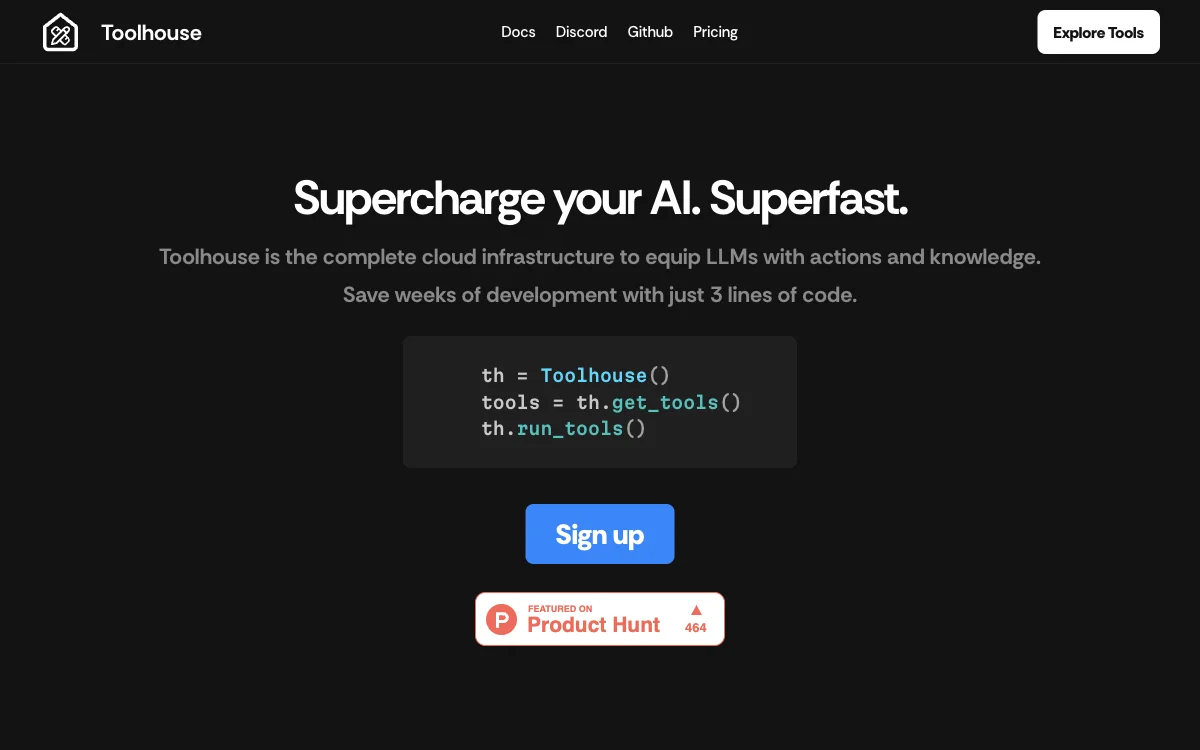

Toolhouse revolutionizes the way developers integrate AI functionality into their applications. Its cloud infrastructure equips Large Language Models (LLMs) with the actions and knowledge they need, significantly reducing development time. With just three lines of code, developers can access a wide range of tools, which makes enhancing AI apps both simple and efficient.

The platform stands out for its low-latency cloud, which not only decreases inference times but also optimizes tool responses to reduce token usage. Tools are served at the edge to keep latency as low as possible. Toolhouse also builds and optimizes prompts for each supported LLM, further improving the performance of AI applications.

One of Toolhouse's key features is its Tool Store, which works like an app store for tools. Developers can install a tool for their LLMs with a single click, so they no longer need to write, host, and maintain the integration themselves. Available capabilities include semantic search, retrieval-augmented generation (RAG), sending email, and sandboxed code execution.

Toolhouse's Universal SDK is another highlight. It supports both plain and streaming responses with the same syntax, and it works with all major LLM APIs and AI frameworks. The SDK also helps reduce hallucinations by grouping only the tools relevant to the task at hand, so the model selects from a focused set rather than from every available tool.

Security and observability are also top priorities for Toolhouse. The platform retrieves and stores data securely on low-latency storage, and it gives developers visibility into LLM inputs and tool responses. This comprehensive approach to AI application development makes Toolhouse a trusted choice among industry leaders.