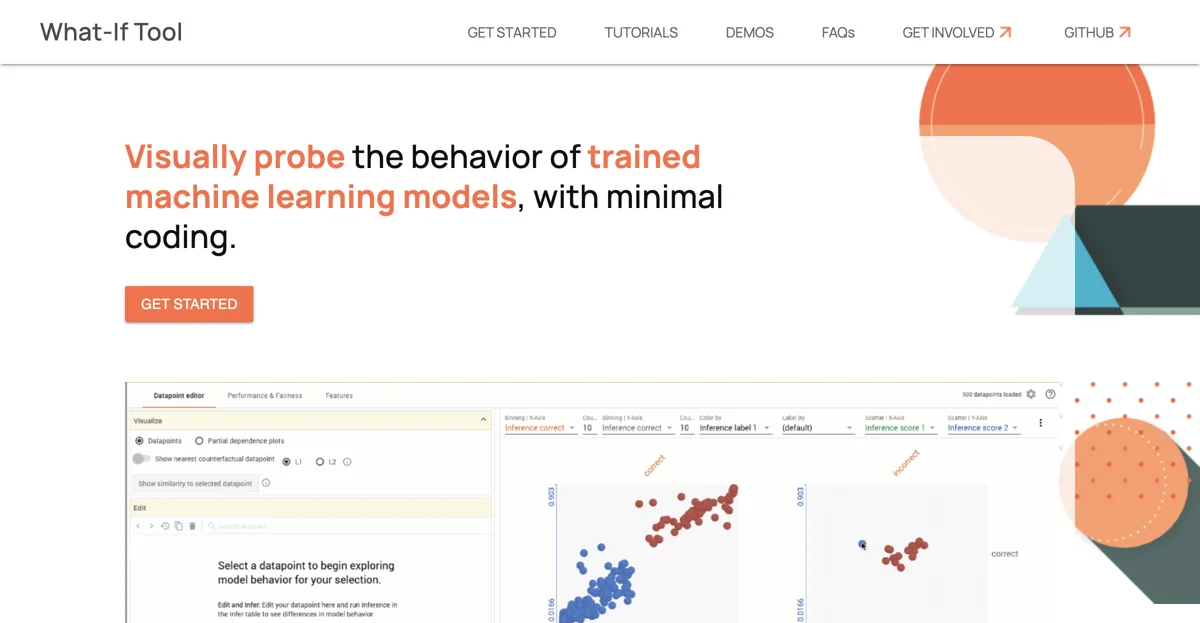

The What-If Tool is a visual interface for probing the behavior of trained machine learning (ML) models with minimal coding. It addresses a central challenge in developing and deploying responsible ML systems: understanding how a model performs across a wide range of inputs. With the What-If Tool, users can test model performance in hypothetical scenarios, analyze the importance of individual data features, and visualize model behavior across multiple models and subsets of input data, as well as against different ML fairness metrics.

The What-If Tool integrates with Colaboratory notebooks, Jupyter notebooks, Cloud AI Notebooks, TensorBoard, TFMA, and Fairness Indicators, so it fits into many existing workflows. It supports TF Estimators, models served by TF Serving, Cloud AI Platform Models, and any model that can be wrapped in a Python function. It handles binary classification, multi-class classification, and regression tasks on tabular, image, and text data.
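To make "a model wrapped in a Python function" concrete, here is a minimal sketch of what such a wrapper might look like for binary classification. The toy weights, the feature names (`age`, `hours_per_week`), and the dict-based example format are all assumptions made for illustration; they are not part of the What-If Tool. The function takes a list of examples and returns one list of class scores per example.

```python
import math

# Hypothetical toy model: hand-picked weights over two made-up tabular features.
WEIGHTS = {"age": 0.04, "hours_per_week": 0.03}
BIAS = -2.5


def custom_predict(examples):
    """Return [P(class 0), P(class 1)] for each example in the input list."""
    outputs = []
    for example in examples:
        # Linear score followed by a sigmoid, i.e. a tiny logistic model.
        logit = BIAS + sum(w * example[name] for name, w in WEIGHTS.items())
        p_positive = 1.0 / (1.0 + math.exp(-logit))
        outputs.append([1.0 - p_positive, p_positive])
    return outputs


# Two illustrative data points (assumed format: one dict per example).
examples = [
    {"age": 25, "hours_per_week": 40},
    {"age": 50, "hours_per_week": 60},
]
scores = custom_predict(examples)
for example, score in zip(examples, scores):
    print(example, "->", [round(s, 3) for s in score])
```

In a notebook, a function of this shape is typically registered through `WitConfigBuilder(...).set_custom_predict_fn(...)` from the `witwidget` package. The exact format of the examples the function receives depends on how they were supplied to the tool, so check the package documentation before adapting this sketch.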

The What-If Tool is also an open, community-driven project that welcomes contributions from anyone interested in developing and improving it. It encourages users to ask and answer questions about models, features, and data points, fostering a collaborative environment for ML research and application. The tool continues to receive updates and new features that support the exploration of AI fairness, model behavior, and data analysis.