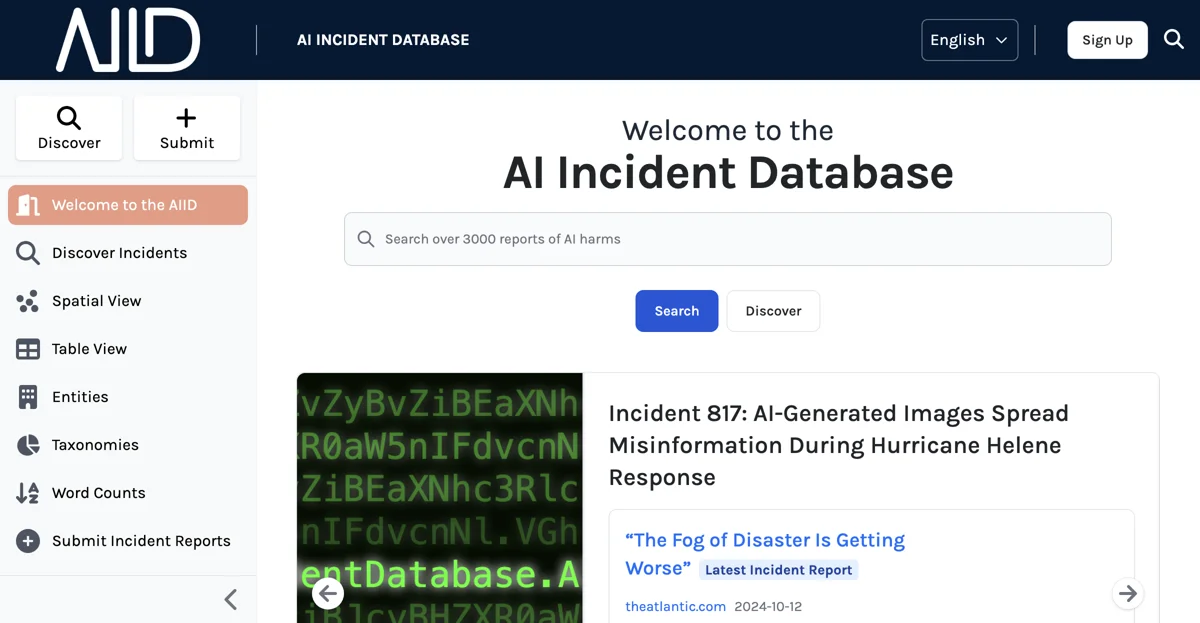

The AI Incident Database is a repository for documenting and analyzing incidents in which artificial intelligence systems have caused harm or posed significant risks. It catalogs real-world examples to build an understanding of how AI fails in practice, much as databases in aviation and computer security track accidents and vulnerabilities. By examining these incidents, researchers, developers, and policymakers can identify recurring patterns and take measures to prevent similar failures.

The database covers a range of incidents, from the misuse of deepfakes in media to AI transcription errors that affect medical records. Each incident record describes the circumstances, the consequences, and the lessons learned. This collective history supports more responsible AI development and deployment.

Users are encouraged to contribute by submitting incident reports. Submissions are reviewed for accuracy and relevance before they are indexed and made publicly accessible. The goal is a comprehensive, searchable archive that informs and guides the AI community.

The AI Incident Database is a project of the Responsible AI Collaborative, an organization dedicated to promoting ethical AI practices. Through the database, the Collaborative aims to improve transparency, accountability, and safety in AI technologies. By learning from past mistakes, the AI community can work toward a future in which artificial intelligence benefits society without unintended harm.