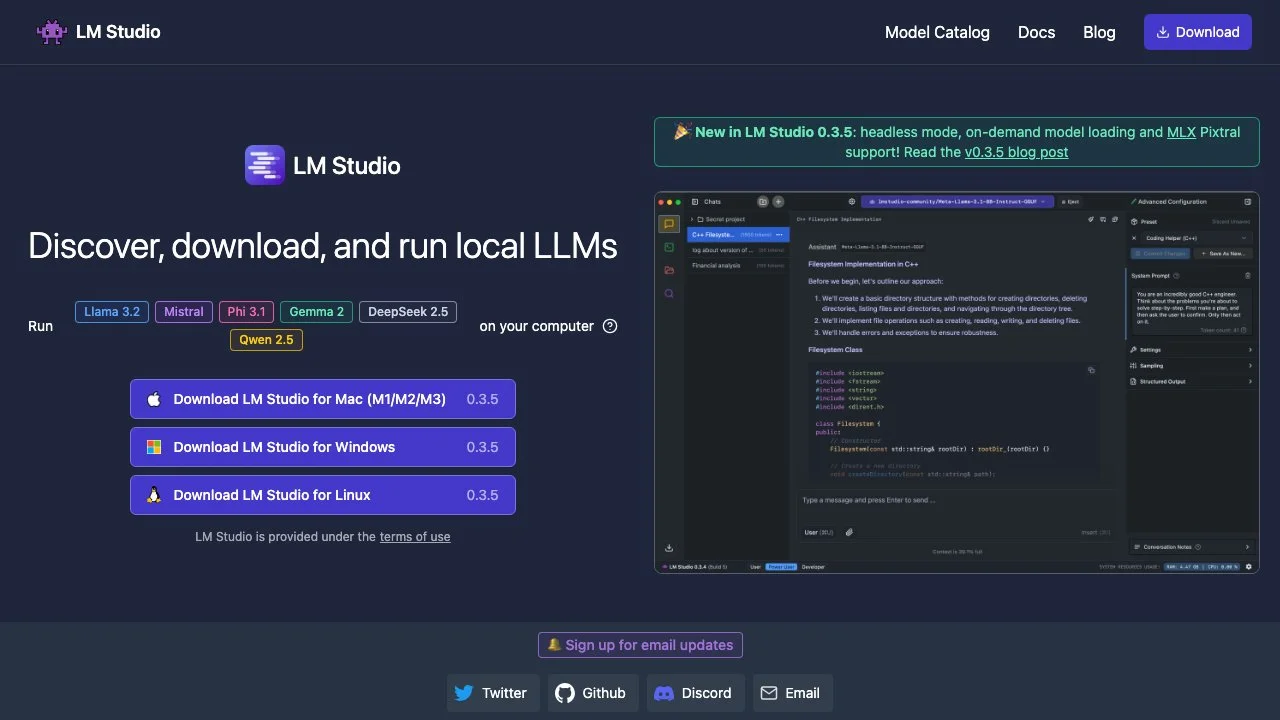

LM Studio is a desktop application for discovering, downloading, and running large language models (LLMs) locally on a personal computer. It supports a wide range of models, including Llama, Mistral, Phi, and Gemma, which can be downloaded directly from Hugging Face repositories. Once a model has been downloaded, everything runs offline on the user's own machine, so prompts and other sensitive data stay private and never leave the computer.

One of LM Studio's standout features is that it can run LLMs on an ordinary laptop, putting these models within reach of a much broader audience. Users can interact with a model in two ways: through the in-app Chat UI, or through a local server that exposes an OpenAI-compatible API, so code written for the OpenAI API can be pointed at a local model instead. The app's Discover page also highlights new and noteworthy LLMs as they become available.
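Because the local server speaks the OpenAI chat-completions format, any HTTP client can talk to it. The sketch below uses only the Python standard library. It assumes the server is running at `http://localhost:1234/v1` (our assumption about the default address; check the server tab in the app) and uses `local-model` as a placeholder model name; the helper names `build_chat_request`, `extract_reply`, and `chat` are hypothetical, not part of LM Studio.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is listening at this address.
# Change it if you configured a different host or port in the app.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat-completions payload.

    `model` is a hypothetical placeholder; use the identifier of a model
    you have loaded in LM Studio.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def extract_reply(body: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return body["choices"][0]["message"]["content"]


def chat(prompt: str, model: str = "local-model", base_url: str = BASE_URL) -> str:
    """POST a prompt to /chat/completions and return the model's reply."""
    payload = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local generation can be slow on a laptop, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return extract_reply(body)
```

With a model loaded and the server started, `print(chat("Explain GGUF in one sentence."))` should print the model's answer. Keeping payload construction and response parsing in separate functions makes it easy to swap `urllib` for the official OpenAI client or another HTTP library later.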

LM Studio works with any GGUF-format Llama, Mistral, Phi, Gemma, StarCoder, or other model available on Hugging Face. The minimum requirements are an Apple Silicon Mac (M1/M2/M3) or a Windows/Linux PC whose processor supports AVX2. LM Studio is made possible by the open-source llama.cpp project, which it builds on.
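On a Windows or Linux PC, the AVX2 requirement is the one most likely to rule out older hardware. On Linux, one way to check is to look for the `avx2` flag in `/proc/cpuinfo`. The following is a minimal, Linux-only sketch; it does not cover Windows or macOS, and the function names are hypothetical.

```python
import platform
from pathlib import Path
from typing import Optional


def has_avx2_flag(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Match the whole flag token so that e.g. 'avx512f' is not mistaken for 'avx2'.
        if sep and key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def linux_cpu_supports_avx2(path: str = "/proc/cpuinfo") -> Optional[bool]:
    """Check for AVX2 on Linux.

    Returns None on non-Linux systems or if the cpuinfo file cannot be read.
    """
    if platform.system() != "Linux":
        return None
    try:
        text = Path(path).read_text()
    except OSError:
        return None
    return has_avx2_flag(text)
```

Calling `linux_cpu_supports_avx2()` returns `True` or `False` on Linux and `None` elsewhere, so callers can tell "no AVX2" apart from "could not check".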

The app is free for personal use, and businesses interested in using LM Studio are invited to contact the team to discuss tailored solutions. With its focus on privacy, versatility, and ease of use, LM Studio is a strong choice for individuals and professionals who want to explore what local LLMs can do.