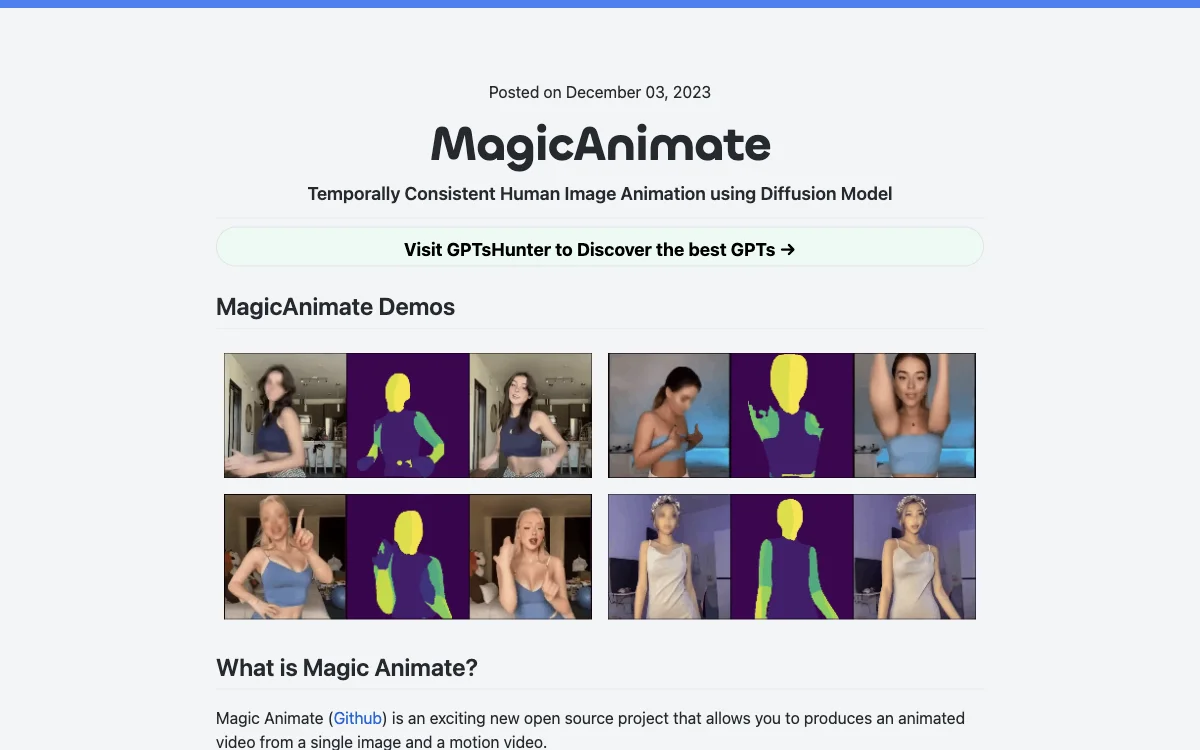

MagicAnimate is a significant step forward in human image animation: it uses diffusion models to produce temporally consistent animations from a single reference image and a motion video. Developed by Show Lab (National University of Singapore) and ByteDance, the open-source project animates reference images with motion sequences from a variety of sources. These include cross-identity animation, where one person's image is driven by another person's motion, and unseen domains such as oil paintings and movie characters.

A standout feature of MagicAnimate is that it preserves the appearance of the reference image while substantially improving animation fidelity. This comes from its diffusion-based framework, which is built to keep the generated frames temporally consistent. MagicAnimate can also be combined with text-to-image (T2I) diffusion models such as DALL·E 3, so images generated from a text prompt can be animated with dynamic actions.

Despite these capabilities, MagicAnimate has limitations. Faces and hands can show some distortion, a recurring problem in animation models. In addition, the default configuration can shift anime-style inputs toward realism, which is most noticeable in faces. Users who want to keep a consistent anime style may need to modify the checkpoint.

Getting started with MagicAnimate requires downloading the pretrained base models, Stable Diffusion v1.5 and the MSE-finetuned VAE, along with the MagicAnimate checkpoints. The installation prerequisites are Python >= 3.8, CUDA >= 11.3, and ffmpeg. If you want to try MagicAnimate without any setup, online demos are available on Hugging Face and Replicate, and a Colab notebook with a step-by-step guide offers another straightforward way to run it.
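Before installing, it can help to confirm the three prerequisites. The following is a minimal Python sketch, not part of MagicAnimate itself: `parse_version` and `check_prerequisites` are hypothetical helper names, and the CUDA version is passed in as a string because how you obtain it (for example from `nvcc --version`) depends on your environment.

```python
import re
import shutil
import sys
from typing import List, Optional, Tuple

# Minimum versions taken from the stated MagicAnimate prerequisites.
MIN_PYTHON: Tuple[int, int] = (3, 8)
MIN_CUDA: Tuple[int, int] = (11, 3)


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the first dotted version number in text, e.g. '11.8' -> (11, 8)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def check_prerequisites(
    python_version: Tuple[int, ...],
    cuda_version: Optional[str],
    ffmpeg_path: Optional[str],
) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems: List[str] = []
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append("Python >= 3.8 is required")
    if cuda_version is None:
        problems.append("CUDA not detected (>= 11.3 is required)")
    elif parse_version(cuda_version)[:2] < MIN_CUDA:
        problems.append(f"CUDA {cuda_version} is older than 11.3")
    if ffmpeg_path is None:
        problems.append("ffmpeg not found on PATH")
    return problems


if __name__ == "__main__":
    # Hypothetical example: the CUDA version is supplied by hand here.
    # shutil.which returns None when ffmpeg is not on PATH.
    issues = check_prerequisites(sys.version_info[:3], "11.8", shutil.which("ffmpeg"))
    print("OK" if not issues else "\n".join(issues))
```

Returning a list of problems rather than stopping at the first failure lets a single run report everything that still needs fixing.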

MagicAnimate can also be called through an API to generate animated videos, which lets developers integrate it into their own applications. The API exposes parameters such as the number of denoising steps, the guidance scale, and the random seed, giving control over how the animation is generated and making results reproducible.
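The sketch below illustrates what these three parameters typically mean in a Stable Diffusion-based pipeline. It rests on several assumptions: the `AnimationRequest` class and its field names are hypothetical (check the demo or API you actually call for its real input names), and the guidance scale is assumed to be the classifier-free guidance weight `s`, which combines unconditional and conditional noise predictions as `eps_uncond + s * (eps_cond - eps_uncond)`. Plain Python lists stand in for tensors.

```python
import random
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


@dataclass
class AnimationRequest:
    """Hypothetical request object; real API input names may differ."""

    reference_image: str  # path or URL of the image to animate
    motion_video: str  # path or URL of the driving motion sequence
    num_inference_steps: int  # number of denoising steps
    guidance_scale: float  # classifier-free guidance weight (assumed meaning)
    seed: Optional[int] = None  # fixed seed for reproducibility; None means random

    def to_payload(self) -> Dict[str, object]:
        """Validate the parameters and return them as a plain dict."""
        if self.num_inference_steps < 1:
            raise ValueError("num_inference_steps must be >= 1")
        if self.guidance_scale < 0:
            raise ValueError("guidance_scale must be non-negative")
        payload = asdict(self)
        if self.seed is None:
            payload.pop("seed")  # let the service choose a seed
        return payload


def initial_noise(seed: int, n: int) -> List[float]:
    """Draw n standard-normal values; the same seed always gives the same noise."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def apply_guidance(eps_uncond: List[float], eps_cond: List[float], scale: float) -> List[float]:
    """Classifier-free guidance: eps_uncond + scale * (eps_cond - eps_uncond)."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    request = AnimationRequest("reference.png", "motion.mp4", 25, 7.5, seed=42)
    print(request.to_payload())
    print(apply_guidance([0.0, 1.0], [1.0, 1.0], 2.0))  # -> [2.0, 1.0]
```

With a fixed `seed`, `initial_noise` returns identical starting noise, which is why repeating a request with the same seed and settings should reproduce the same output. A larger `guidance_scale` pushes each denoising step further toward the conditional prediction, and more denoising steps generally trade speed for quality.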

In conclusion, MagicAnimate opens up new possibilities in human image animation. Its ability to produce high-quality, temporally consistent animations from a single image and a motion video makes it valuable for creators and developers alike. Despite some limitations, its approach and its compatibility with other models, such as T2I generators, position it as a leading solution in AI-driven animation.