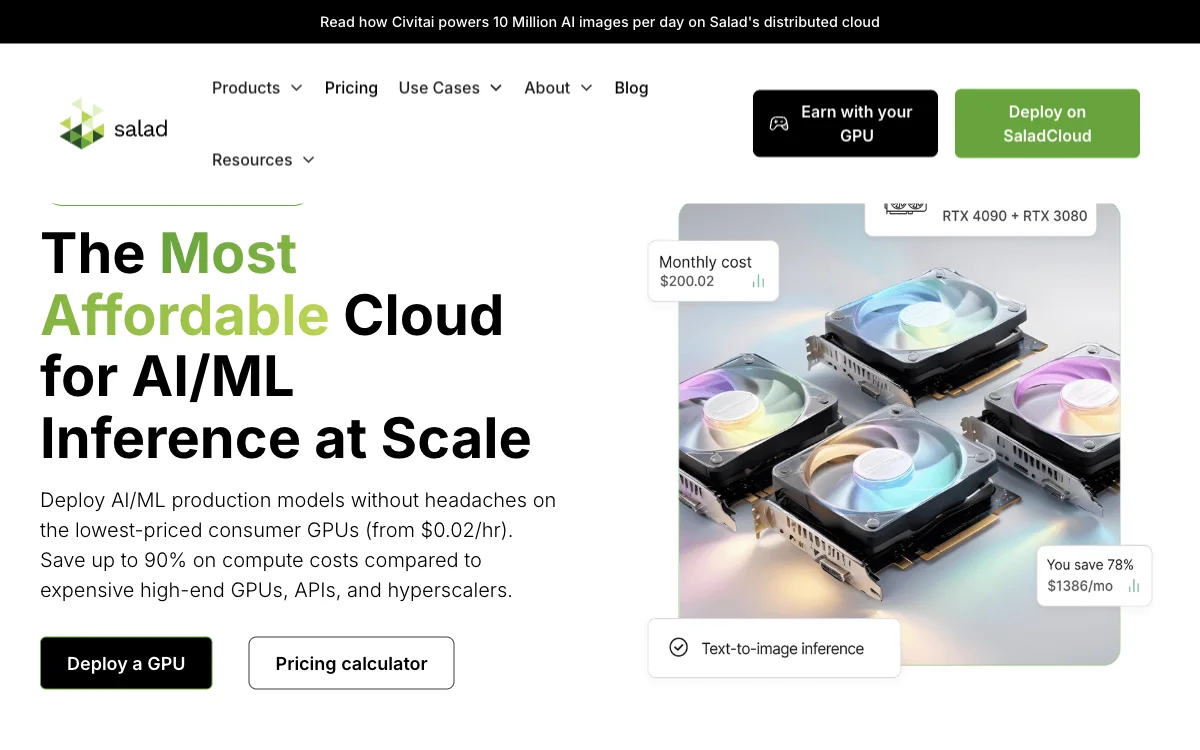

SaladCloud deploys AI and machine learning models on a distributed network of consumer GPUs. With prices starting at $0.02 per hour, it provides an economical alternative to traditional cloud services, enabling users to save up to 90% on compute costs. The platform supports a wide range of production AI/ML models and scales with workload demand.

SaladCloud's infrastructure is built on the world's largest distributed network, featuring over 11,000 daily active GPUs across 191 countries. This vast network ensures high availability and low latency, making it ideal for AI inference, batch jobs, and other GPU-intensive tasks. The platform's fully managed container service simplifies deployment, allowing users to focus on their core activities without the hassle of managing virtual machines or individual instances.

One of SaladCloud's standout features is its commitment to sustainability. By using idle consumer GPUs, the platform reduces the environmental impact of computing and broadens access to powerful resources. This approach aligns with the growing demand for green technology and offers a sustainable response to the global chip shortage.

SaladCloud's pricing model is designed for flexibility and cost-effectiveness. Dynamic resource allocation lets users scale their workloads in real time, so they pay only for what they use. The platform also offers a GPU pricing calculator that lets users estimate their savings compared to other cloud providers.
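
The arithmetic behind such an estimate is simple enough to sketch. The Python snippet below is an illustration only, not SaladCloud's actual calculator: the function name `estimate_savings` is hypothetical, the $0.02 hourly rate is the starting price quoted above, and the $0.20 comparison rate is an assumed placeholder rather than a real quote from any provider.

```python
def estimate_savings(gpu_hours, rate_per_hour, comparison_rate_per_hour):
    """Return (cost, comparison_cost, savings_fraction) for a workload.

    Hypothetical helper for illustration; all rates are supplied by the caller.
    """
    if gpu_hours < 0 or rate_per_hour < 0 or comparison_rate_per_hour <= 0:
        raise ValueError("hours and rates must be non-negative; comparison rate must be positive")
    cost = gpu_hours * rate_per_hour
    comparison_cost = gpu_hours * comparison_rate_per_hour
    # The savings fraction depends only on the ratio of the two hourly rates.
    savings_fraction = 1 - rate_per_hour / comparison_rate_per_hour
    return cost, comparison_cost, savings_fraction


# Example workload: 10 GPUs running for 24 hours each.
cost, comparison_cost, savings = estimate_savings(
    gpu_hours=10 * 24,
    rate_per_hour=0.02,             # starting price quoted in the text
    comparison_rate_per_hour=0.20,  # assumed placeholder, not a real quote
)
print(f"${cost:.2f} vs ${comparison_cost:.2f} ({savings:.0%} saved)")
```

Because billing is per hour of use, the savings percentage depends only on the ratio of the two hourly rates, while the absolute saving grows with the number of GPU hours consumed.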

Trusted by hundreds of innovative companies, SaladCloud has proven its value in various use cases, from AI inference to molecular simulation. Its ability to deliver high performance at a fraction of the cost makes it a preferred choice for machine learning and data science teams worldwide.

In summary, SaladCloud stands out as a leader in affordable, scalable, and sustainable cloud computing. Its unique approach to leveraging consumer GPUs offers significant cost savings and environmental benefits, making it an attractive option for businesses and researchers alike.