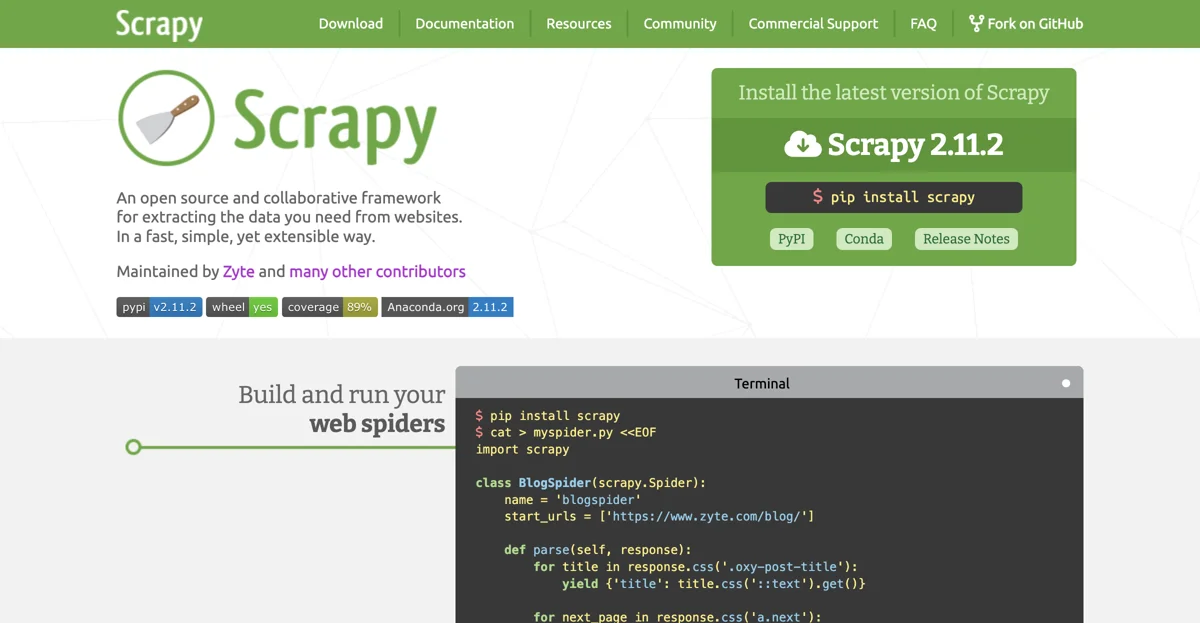

Scrapy is an open source, collaborative framework for extracting the data users need from websites in a fast, simple, and extensible way. It is maintained by Zyte and many other contributors, and it has become a popular choice for web data extraction.

To get started with Scrapy, install the latest release (Scrapy 2.11.2 at the time of writing) from PyPI with `pip install scrapy`, or install it through Conda. Once it is installed, users can begin writing spiders. For example, a simple spider can be created as follows:

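    import scrapy


    class BlogSpider(scrapy.Spider):
        # Unique name that identifies this spider
        name = 'blogspider'
        # The crawl starts from these URLs
        start_urls = ['https://www.zyte.com/blog/']

        def parse(self, response):
            # Emit one item per post title found on the page
            for title in response.css('.oxy-post-title'):
                yield {'title': title.css('::text').get()}

            # Follow pagination links and parse them with this same method
            for next_page in response.css('a.next'):
                yield response.follow(next_page, self.parse)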

This code snippet extracts the post titles from a blog and follows the pagination links to later pages. Once the spider is saved as myspider.py, it can be run with `scrapy runspider myspider.py`.
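To keep the scraped titles, `runspider` can also write them to a feed file: for instance, `scrapy runspider myspider.py -o titles.jl` should produce one JSON object per line. The sketch below reads such a file back using only the standard library. The file name `titles.jl` and the helper `load_titles` are assumptions made for illustration; they are not part of the original example.

```python
import json
from pathlib import Path


def load_titles(path):
    """Return the 'title' values from a JSON Lines feed file.

    Assumes each non-empty line is a JSON object such as
    {"title": "..."}, which is the shape the spider yields.
    """
    titles = []
    for line in Path(path).read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        item = json.loads(line)
        title = item.get("title")
        if title is not None:  # skip items whose title was empty
            titles.append(title)
    return titles


if __name__ == "__main__":
    feed = Path("titles.jl")  # hypothetical output file name
    if feed.exists():
        for title in load_titles(feed):
            print(title)
```

Note that `-o` appends to an existing file, so rerunning the spider against the same file name accumulates items from every run.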

Spiders can also be deployed to Zyte Scrapy Cloud. First, install shub (`pip install shub`) and run `shub login`, entering the Zyte Scrapy Cloud API key when prompted. Then deploy the spider with `shub deploy`, schedule it for execution with `shub schedule`, and retrieve the scraped data with `shub items`.
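The order of these steps can be captured in a small script. What follows is only a sketch: it assumes shub is already installed and logged in, that `shub schedule` takes the spider name, and that `shub items` takes the ID of the job created by the schedule step. The helper names `shub_commands` and `run_all` are hypothetical, and by default nothing is executed; the commands are only printed.

```python
import shlex
import subprocess


def shub_commands(spider_name, job_id=None):
    """Build the shub command lines for deploy, schedule, and items, in order."""
    commands = [
        ["shub", "deploy"],
        ["shub", "schedule", spider_name],
    ]
    if job_id is not None:
        # The job ID is only known after a job has been scheduled.
        commands.append(["shub", "items", job_id])
    return commands


def run_all(commands, dry_run=True):
    """Print each command, or execute it when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop on the first failure


if __name__ == "__main__":
    run_all(shub_commands("blogspider"))
```

Keeping the dry run as the default makes it easy to review the commands before anything is deployed.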

Scrapy's main advantages are its speed and power: users write only the rules for extracting the data, and Scrapy takes care of the rest. It is extensible by design, so new functionality can be plugged in without modifying the core. Because it is written in Python, it is portable and runs on Linux, Windows, Mac, and BSD.
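As one illustration of that plug-in design, an item pipeline in Scrapy 2.11 is an ordinary Python class with a `process_item(self, item, spider)` method, which Scrapy calls for each scraped item once the class is listed in the `ITEM_PIPELINES` setting. The following is a minimal sketch and is not taken from the original article. The class name `TitleCleanupPipeline` and the module path `myproject.pipelines` are hypothetical, and the items are assumed to be plain dicts like the ones the spider yields.

```python
class TitleCleanupPipeline:
    """Normalize whitespace in the 'title' field of each scraped item."""

    def process_item(self, item, spider):
        title = item.get("title")
        if isinstance(title, str):
            # Collapse runs of whitespace and trim both ends.
            item["title"] = " ".join(title.split())
        return item  # returning the item hands it to the next pipeline


# In a Scrapy project this would be enabled in settings.py, for example:
# ITEM_PIPELINES = {"myproject.pipelines.TitleCleanupPipeline": 300}

if __name__ == "__main__":
    pipeline = TitleCleanupPipeline()
    print(pipeline.process_item({"title": "  Hello \n  world "}, spider=None))
```

The spider itself stays unchanged: the cleanup logic lives in a separate class that is switched on through configuration.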

The Scrapy community is healthy: the project has many stars, forks, and watchers on GitHub, a large following on Twitter, and numerous questions on StackOverflow. Together these point to widespread use of, and interest in, the framework.

In conclusion, Scrapy provides a comprehensive solution for web scraping and crawling, which makes it a valuable tool for anyone who needs to gather data from the web efficiently.